The Challenges of Social Robotics

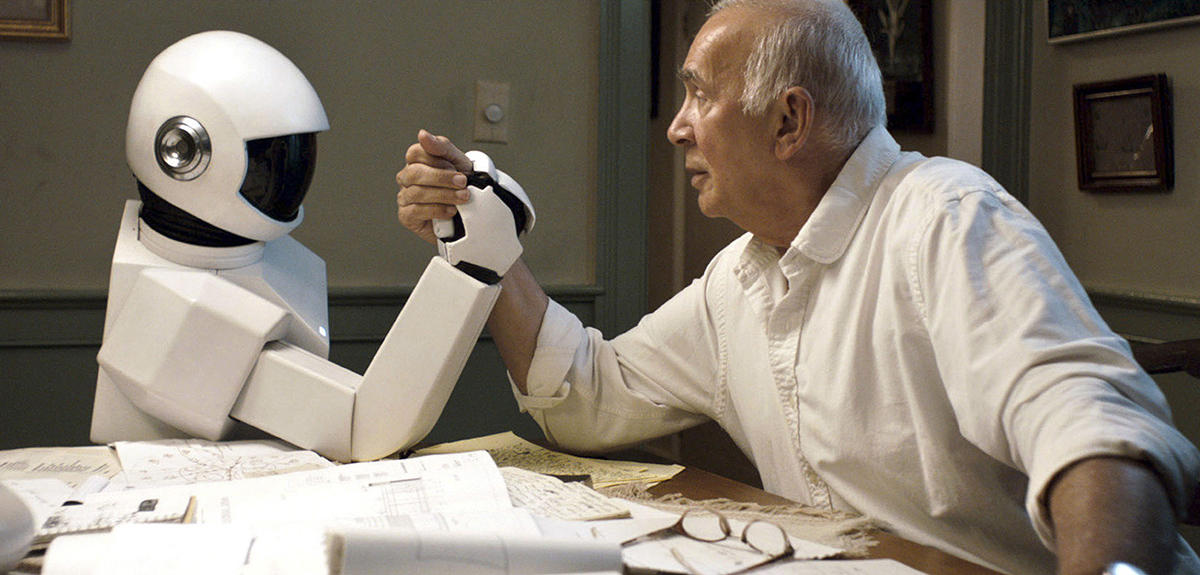

Ever since Véronique Aubergé was recruited to the Grenoble Informatics Laboratory (LIG)¹ in 2012, this language specialist has been obsessed with social robotics, that is, the way in which humans develop relationships with machines, and the nature of those relationships. With her team, the researcher, who was involved in the creation of the first 'Robotics and Ethics' chair in France in 2016, has decided to target a specific group: isolated elderly people. She is convinced that robots will be able to help restore social bonds by 'retraining' this population group to connect with others. On the day we met, her team was preparing to host a retired woman from Grenoble in the “laboratory’s living lab,” a life-size three-room apartment in which she will be left alone with a strange-looking robot on wheels. At the heart of the control room, where screens and switches make it possible to orchestrate the experiment “behind the scenes,” a truly passionate researcher told us about her work.

Before turning to the robots, let’s talk about your background. You have always been fascinated by language. When did you develop this near-obsession?

Véronique Aubergé: When I was a teenager, I wanted to become a dancer; I was even accepted at the opera ballet school in Geneva. I was 14 and I wanted to understand why, with equal skill, two dancers performing the same figure did not produce the same impression on the public. I hoped to find out what gracefulness was. It is a bit like charisma: by employing almost the same expressions, some people will win over their audience, while others will fail to do so. Aside from the vocabulary used, what makes a Gandhi or a de Gaulle? My intuition was that this type of communication, which goes beyond words, beyond the alphabet of dance figures, would be easier to fathom through dance than through linguistics. This is why I wanted to be a professional dancer and to do linguistics 'for fun.' But life decided otherwise: my body gave out and I had to switch my priorities.

And yet you studied computer science before turning to language…

V. A.: I took a detour before embracing linguistics. I was good at math, so I didn’t really have a choice: I was steered towards advanced mathematics, and then went to university where I followed a curriculum of math and computer science. My stroke of luck was that I had to work to pay for my studies, so I went around the labs in Grenoble and was taken on at the Institute of Phonetics (which has since become the Institute of Spoken Communication), where I stayed for thirty years! By the second year of my degree, I had already landed my first research contract with the National Center for Telecommunication Studies (Cnet): I developed a small program to perform signal processing and learn the most remarkable aspects of speech with a phonetician. Alongside my curriculum in hard science, I attended phonetics and psychology courses to get a better understanding of the speaking individual. It was quite unheard-of at the time, because these disciplines didn’t actually 'communicate' with one another.

You have focused on a particular feature of language: prosody. Could you give us a concise definition of this term?

V. A.: Prosody is the music of words: rhythm, intonation, silences, emotion… Everything that is neither vocabulary nor structure. We can make ourselves understood merely by sighing, which clearly proves that words are of secondary importance in communication between individuals. A three-month-old baby does not have a command of language, but already has a rather fine prosody: we know that he is hungry, tired, or having a tantrum. My goal has always been to dissect oral language to reveal its innermost workings. It’s a protracted process: thirty years on, I’m still at it!

You have shown particular interest in the learning of a second language. Why?

V. A.: In order to identify the mechanisms of spoken language, I decided to tackle situations where it is deficient. I therefore concentrated on communication pathologies, in particular the language-related ones that arise, for example, when learning a foreign language. That is why I spent a lot of time investigating the prosody of Mandarin, Vietnamese, English, and Japanese… When you practice a language that isn’t your mother tongue, you develop pathologies of expression that can give rise to serious misunderstandings: you may have a perfect command of the vocabulary and syntax, but not master the prosody at all… This clearly shows that prosody is where communication develops. The tone of a Japanese person who intends to express extreme politeness will thus be perceived by a Westerner as arrogant and authoritarian, and the more the Japanese speaker, noticing this unease, tries to be polite, the more the Westerner will bristle… When I was 12, I saw Kurosawa’s Seven Samurai: to my young French girl’s ear, these men, who were totally submissive to the emperor, sounded very rude when they addressed him. I thought to myself: there’s something fishy there!

At the same time, you have become an acknowledged specialist of speech synthesis.

V. A.: Yes, that was in fact my first proper research project, leading in particular to the construction of speech synthesis systems in French. When you create devices that reproduce human speech, you have to know how that speech works. To carry out these projects, I mainly worked with manufacturers: they don’t care about theory, they want results—and that is a strong motivation for a researcher. I did my DEA on speech synthesis at the Cnet, and my thesis with the company Oros, which in the 1980s was running a European project in this area. Because they were particularly intelligible, my synthesis systems earned me a certain level of recognition. The Cnet and Bell Labs even wanted to hire me. But I myself wasn’t satisfied with these systems: I felt they weren’t being evaluated on the right criteria, because we quite simply hadn’t understood human communication.

What was wrong with speech synthesis systems at the time?

V. A.: No one thought about the effect a synthesized voice produces on the human it is interacting with. For example, if a system imitates the speech of an air hostess, it is necessary to know why that voice is used and what the consequences are on the human listening to it. The film Her, by Spike Jonze, which was released in 2014, is a good illustration: the hero falls in love with Samantha, an intelligent, intuitive, and surprisingly funny female synthesized voice… who, after a while, admits to being in love with 637 other men! The hero had eventually forgotten that he was talking to a machine.

Your arrival at the Grenoble Informatics Laboratory in 2012 gave a new twist to your research…

V. A.: Having worked as a researcher at the Institute of Spoken Communication for almost thirty years, I now find myself for the first time in a 100% computer science laboratory, but one that has found room for my skills in the human and social sciences. This opened up a new field to me, robotics, while also enabling me to pursue my old obsession: understanding what it is, above and beyond words and the information they convey, that creates connection in spoken communication, something I call the 'socio-affective glue.' I hope that robots will finally help me understand what it is that connects people, and perhaps even to repair this connection when it is damaged… My project is to examine the interactions at play (or not) between a robot and a person who has difficulties creating social connections. For this reason, I chose to initially work with elderly people living in isolation. Some very isolated individuals do actually lose the 'user manual' for social relations and come across as unpleasant to their carers or children when they come to visit them. We know that isolated people are five times more likely to develop physical or neurological pathologies.

How do you build your social robotics experiments?

V. A.: My aim is to elucidate how people become attached to robots, how they display this attachment, and whether it is reproducible. I therefore created an observation protocol with my team. We use the Domus Living Lab, a small three-room apartment recreated at our premises, where all the controls (raising or lowering the blinds, switching on the electric kettle, the television, or the lights) are activated via Emox, a 30-centimeter-high home automation robot on wheels provided by the company Awabot. To investigate the effect of a robot on the humans around it, we observe elderly people interacting with Emox in real-life conditions. So as not to influence the experiment, we don’t tell them the real reason for their visit to the Domus but pretend to be testing a pilot apartment for dependent people.

What happens during these visits?

V. A.: We explain to our hosts that Emox is a voice-controlled robot and that they have to give it direct instructions—'switch on the television,' 'put the kettle on'—to make the appliances in the apartment work. The robot follows them docilely from one room to another. Generally, two scenarios arise: if the person is not very isolated, they will find the robot entertaining for a while but will quickly lose interest in it; if they are very isolated, it will take them some time to pay attention to Emox, other than to give it a few instructions, but the exchanges will gradually intensify to become very sustained. For this experiment, we chose to use a robot with limited language capacities that makes little mouth sounds, produces onomatopoeia, and utters two or three short sentences such as 'Like that?' or 'Anything I can do?'

Our hypothesis is that these small sounds are powerful enough to create the 'socio-affective glue' I previously mentioned between the human and the robot. In fact, when our elderly visitors provide feedback on using the apartment, they spontaneously bring up the presence of the robot within the first few minutes, and always come to the same conclusion: “It’s good for someone on their own … ” This confirms our hypothesis that Emox could help to repair social connections by gradually training the elderly to become reaccustomed to social contact with others.

And yet you are not in favor of 'companion' machines…

V. A.: There can be no question of making robots substitutes for human presence. An automaton designed with the sole purpose of keeping someone company is extremely dangerous as long as we do not know exactly what is at play in the interaction with humans. Hence the importance for the robot to have a well-defined role, which cannot be assumed by a human: home automation, as is the case with Emox, or the purification of air inside buildings. I am not taking that example at random: we have forged a partnership with the manufacturer Partnering Robotics, which sells Diya One, an air purifier, to companies, and wants to find out why employees quite unexpectedly start interacting with it. Some of them think it’s 'sweet' because it moves out of their way (it is programmed to avoid obstacles), while others find it 'rude' or 'shifty.' Partnering Robotics would like to be able to elucidate and manage these reactions. Human beings are such that they cannot help considering the objects in their environment from an anthropomorphic perspective.

Emox is not the only robot you are testing in your laboratory…

V. A.: That’s right. We are running another project on telepresence robots, especially those used in schools to enable sick children to 'attend' their classes from hospital. We are working on models that are already on the market, such as Beam or VGo, which are essentially teleoperated videoconferencing systems. But we want to go further, and the laboratory’s Fab Lab is developing our prototype telepresence robot, RobAIR, which will be trialed in a class in the Dijon school district (northeastern France) from February 2017. The reason is that pupils do not simply listen to the teacher: they glance at or whisper to their neighbors, for example. These interactions are an integral part of their attentional system and condition their motivation to learn. RobAIR should therefore offer three different points of contact: a vocal touch, which allows absent pupils to whisper in their neighbor’s ear; a visual touch that enables them to glance at another child (to make this possible, the robot is equipped with flashing LEDs); and, lastly, a tactile touch, since RobAIR is equipped with 'hugs,' active zones located in its 'back,' which those in class will be able to press and which the absent pupil will feel by means of a vibrating noise.

Your academic career has been somewhat unconventional, and remains so even today…

V. A.: Yes, I’m a kind of oddity at university in that I do indeed have the qualifications to conduct research in computer science, signal processing, communication science, and language science. But I had to strive for that, by attending a whole lot of courses at the same time. Fortunately, things are starting to change… On a personal level, I have fought hard for disciplines as foreign to one another as the language sciences and IT (which everyone actually calls language!) to be able to finally 'communicate' within the same curriculum. Today, I head the IT department for languages, arts, and language at Grenoble’s arts university, which is a small revolution! Within this department, training in automatic language processing and the language industry is particularly sought-after by manufacturers and laboratories, which actually approach us to 'book' students. That’s one of the things I am most proud of.

- 1. CNRS / Grenoble INP / INRIA / Université Grenoble Alpes.