Forthcoming revolution will unveil the secrets of matter

One quintillion operations per second. Exaflop computers (from the prefix exa-, meaning 10¹⁸, and flops, the number of floating-point operations that a computer can perform in one second) will offer this colossal computing power, as long as specifically designed programs and codes are available. An international race is thus underway to produce these impressive machines, and to take full advantage of their capacities. The European Commission is financing ambitious projects that are preparing the way for exascale, which is to say any form of high-performance computing that reaches an exaflop. The Targeting Real chemical precision at the EXascale (TREX)¹ programme focuses on highly precise computing methods in the fields of chemistry and materials physics.

Officially inaugurated in October 2020, TREX is part of the broader European High Performance Computing (EuroHPC) joint undertaking, whose goal is to ensure Europe is a player alongside the United States and China in exascale computing. "The Japanese have already achieved exascale by lowering computational precision," enthuses Anthony Scemama, a researcher at the LCPQ,² and one of the two CNRS coordinators of TREX. "A great deal of work remains to be done on codes if we want to take full advantage of these future machines."

Exascale computing will probably use GPUs as well as traditional processors, or CPUs. These graphics processors were originally developed for video games, but they have enjoyed increasing success in data-intensive computing applications. Here again, their use will entail rewriting programs to fully harness their power for those applications that will need it.

"Chemistry researchers already have various computing techniques for producing simulations, such as modelling the interaction of light with a molecule," Scemama explains. "TREX focuses on cases where the computing methods for a realistic and predictive description of the physical phenomena controlling chemical reactions are too costly."

"TREX is an interdisciplinary project that also includes physicists," stresses CNRS researcher and project coordinator Michele Casula, at the IMPMC.3 "Our two communities need computing methods that are powerful enough to accurately predict the behaviour of matter, which often requires far too much computation time for conventional computers."

The TREX team has identified several areas for applications. First of all, and surprising though it may seem, the physicochemical properties of water have not been sufficiently modelled. The best ab initio simulations – those based on fundamental interactions – are wrong by a few degrees when trying to estimate its boiling point.

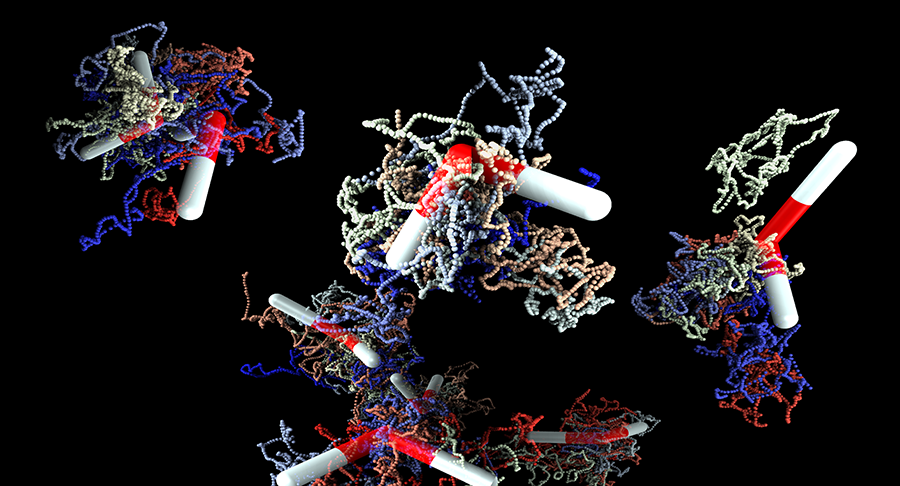

Improved water models will enable us to more effectively simulate the behaviour of proteins, which continually evolve in aqueous environments. The applications being developed in connection with the TREX project could have a significant impact on research in biology and pharmacy. For example, nitrogenases, which make essential contributions to life, transform nitrogen gas into ammonia, a form that can be used by organisms. However, the theoretical description of the physicochemical mechanisms used by this enzyme is not accurate enough under current models. Exascale computing should also improve experts’ understanding of highly correlated materials such as superconductors, which are characterised by the substantial interactions between the electrons they are made of.

"The microscopic understanding of their functioning remains an unresolved issue, one that has nagged scientists ever since the 1980s," Casula points out. "It is one of the major open problems in condensed matter physics. When mastered, these materials will, among other things, be able to transport electricity with no loss of energy." 2D materials are also involved, especially those used in solar panels to convert light into power.

"To model matter using quantum mechanics means relying on equations that become exponentially more complex, such as the Schrödinger equation, whose number of coordinates increases with the system, " Casula adds. "In order to solve them in simulations, we either have to use quantum computers, or further explore the power of silicon analogue chips with exascale computing, along with suitable algorithms."

To achieve this, TREX members are counting on Quantum Monte Carlo (QMC), and developing libraries to integrate it into existing codes. "We are fortunate to have a method that perfectly matches exascale machines," Scemama exclaims. QMC is particularly effective at numerically calculating observables (the quantum equivalent of classical physical quantities) that bring into play quantum interactions between multiple particles.

"The full computation of these observables is too complex," Casula stresses. "Accurately estimating them using deterministic methods could take more time than the age of the Universe. Simply put, QMC will not solve everything, but instead provides a statistical sampling of results. Exaflop computers could draw millions of samples per second, and thanks to statistical tools such as the central limit theorem, the more of these values we have, the closer we get to the actual result. We can thus obtain an approximation that is accurate enough to help researchers, all within an acceptable amount of time."

With regard to the study of matter, an exascale machine can provide a good description of the electron cloud and its interaction with nuclei. That is not the only advantage. "When configured properly, these machines may use thirty times more energy than classical supercomputers, but in return will produce a thousand times more computing power," Scemama believes. "Researchers could launch very costly calculations, and use the results to build simpler models for future use."
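
Taken at face value, Scemama's two figures imply a gain in energy efficiency that is easy to work out. The short sketch below only does that arithmetic. It assumes both factors refer to the same running time, which the article does not state explicitly, and the function name is hypothetical.

```python
def efficiency_gain(compute_factor=1000.0, energy_factor=30.0):
    """Ratio of operations per unit of energy, new machine versus old.

    Defaults are the two factors quoted in the article; treating them as
    applying over the same running time is an assumption.
    """
    return compute_factor / energy_factor


if __name__ == "__main__":
    gain = efficiency_gain()
    print(f"about {gain:.0f} times more operations per unit of energy")
```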

The TREX team nevertheless insists that above all else, it creates technical and predictive tools for other researchers, who will then seek to develop concrete applications. Ongoing exchanges have made it possible to share best practices and feedback among processor manufacturers, physicists, chemists, researchers in high-performance computing, and TREX's two computing centres.

- 1. In addition to the CNRS, the project includes the universities of Versailles Saint-Quentin-en-Yvelines, Twente (the Netherlands), Vienna (Austria), Lodz (Poland), the SISSA international school of advanced studies (Italy), the Max Planck Institute (Germany), the University of Technology in Bratislava, STU (Slovakia), as well as the CINECA (Italy) and Jülich (Germany) supercomputing centres, the German Megware and French TRUST-IT companies.

- 2. Laboratoire de chimie et physique quantiques (CNRS / Université Toulouse III - Paul Sabatier).

- 3. CNRS / MNHN / Sorbonne Université.

Author

A graduate of the School of Journalism in Lille, Martin Koppe has worked for a number of publications including Dossiers d’archéologie, Science et Vie Junior and La Recherche, as well as the website Maxisciences.com. He also holds degrees in art history, archaeometry, and epistemology.