
When the cloud gets closer

In the world of distributed systems, where resources such as memory and computing power are divided among different machines, the cloud has experienced extraordinary democratisation over the last fifteen years. However, it is not the only system for remote data processing and storage. “A cloud typically consists of a large data centre, or multiple medium-sized centres, but this system is reaching its limits because it is increasingly difficult to concentrate computing resources,” explains Guillaume Pierre, a professor at Université de Rennes and a member of the IRISA laboratory for research and innovation in digital science and technology.1 “We realised that sending data over long distances for mass processing in large centres was not always the best solution.”

Data processed and stored nearby

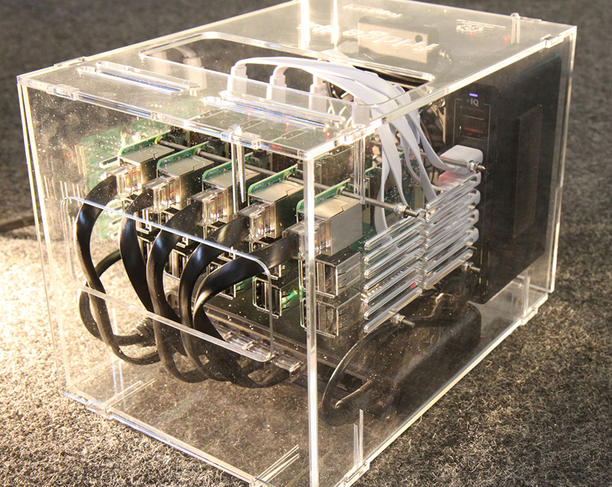

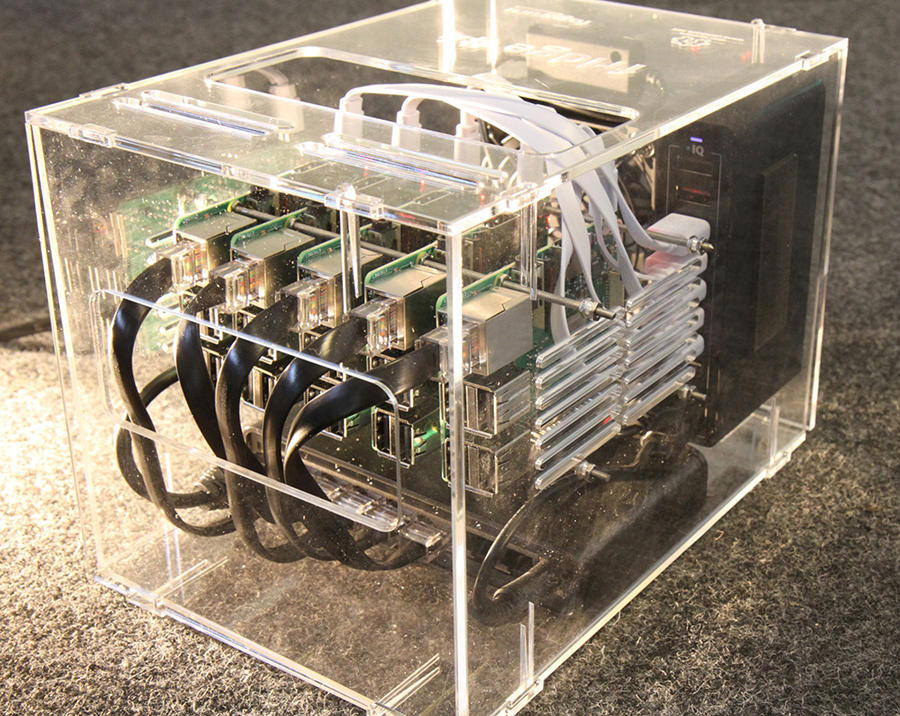

Fog computing provides an answer to these issues. The data is processed using network nodes that are closer to their source, and on a reduced scale. For sensors and connected objects, sending information to a small local server, which will relay it to more powerful machines only if needed, can be sufficient. Relying on sizeable data centres can have pitfalls. Due to legislation, it may be preferable for personal, medical, and military material not to be stored abroad. Distance can also lead to latency, which is not a problem when it comes to checking emails, but can be detrimental to critical or interactive applications ranging from video games to remote surgery.
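The idea of a small local server that relays data onward only if needed can be sketched in a few lines of Python. This is a minimal illustration, not the design of any real platform: the `FogNode` class, its `threshold` parameter and the sample readings are all hypothetical, and the "relay" is simply a list standing in for a network call to a remote machine.

```python
from dataclasses import dataclass, field
from statistics import mean


@dataclass
class FogNode:
    """Hypothetical local node: keeps readings nearby, relays only unusual ones."""
    threshold: float                                 # assumed alert level, illustrative only
    local_store: list = field(default_factory=list)  # data kept close to its source
    relayed: list = field(default_factory=list)      # stand-in for data sent to the cloud

    def ingest(self, reading: float) -> bool:
        """Store a reading locally; return True if it was also relayed upstream."""
        self.local_store.append(reading)
        if reading > self.threshold:
            # In a real deployment this would be a network call to a larger machine.
            self.relayed.append(reading)
            return True
        return False

    def summary(self) -> float:
        """Local aggregate that could be sent upstream instead of the raw data."""
        return mean(self.local_store)


node = FogNode(threshold=30.0)
for value in [21.5, 22.0, 35.2, 21.8]:   # made-up sensor readings
    node.ingest(value)

print(len(node.local_store), len(node.relayed))
```

In this sketch all four readings stay on the local node and only the one above the threshold travels further, which is the property the paragraph above describes.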

“The challenge of fog computing is to preserve the cloud’s beneficial properties, such as flexibility and power, while more effectively distributing resources,” affirms Pierre. “However, many models and scenarios designed for the cloud work poorly or not at all with fog computing. We therefore have to reinvent the architectures of these systems.”

For instance, machines are physically very close to one another in data centres, which increases computing reliability and reduces latency in information processing. Their connections via cables are much quicker than the large-scale networks used by fog computing, such as 5G, ADSL, or fibre optics. Managing these dispersed machines also requires specific optimisation of the network and its flows. If the data is processed in nodes that are closer than a major data centre abroad, but communication between these nodes is poorly optimised, the information can end up travelling over longer distances.

Experimentation in connection with a European project

These research and improvement areas were notably put into practice as part of FogGuru, which Pierre coordinates. “This European doctoral training project enabled eight PhD students to apply their talents to fog computing. The local authorities of Valencia, Spain gave us the keys to the city, as it were, in order to experiment with these technologies.”

Two specific uses were studied. The first involved the distribution of fresh water, an invaluable resource in this semi-arid zone. Two hundred thousand smart meters were installed in private homes to detect leaks, but the results were unsatisfactory. “It took three or four days to identify a problem. With fog computing, we collect and process data up to once per hour, so we can notify technicians within half a day. We developed a meter prototype, but it was not deployed on a large scale due to the (Covid-19) pandemic.”
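To give a feel for what hourly processing on a local node might involve, here is a small Python sketch of one common heuristic: a household whose consumption never drops to near zero over a long stretch of hours is flagged as a possible leak. This is an assumption made for illustration, not the method used in FogGuru, and the function name `suspect_leak`, the 12-hour `window` and the `min_flow` threshold are all hypothetical.

```python
def suspect_leak(hourly_litres, window=12, min_flow=1.0):
    """Flag a possible leak if the last `window` hourly readings all exceed `min_flow`.

    Assumption (not from the project): normal households use almost no water
    for at least one hour in any long window, for example at night.
    """
    if len(hourly_litres) < window:
        return False  # not enough data yet to decide
    return all(v > min_flow for v in hourly_litres[-window:])


# Made-up hourly readings in litres.
normal_home = [0.0, 0.0, 0.0, 12.0, 30.0, 8.0, 0.5, 0.0, 15.0, 22.0, 4.0, 0.0]
leaking_home = [3.1, 3.0, 3.2, 15.0, 33.0, 11.0, 3.4, 3.0, 18.0, 25.0, 7.0, 3.1]

print(suspect_leak(normal_home), suspect_leak(leaking_home))
```

Because the check only needs the most recent readings of a single meter, it can run on a nearby node each hour without sending the raw data to a distant data centre.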

The second application, which was finalised, involved the Valencia marina. It consisted of installing sensors to measure wind and wave force, make weather readings, and keep track of entries and exits from the site, and then using this data to facilitate its management. A hackathon was organised by FogGuru, and was won by a team that designed a mathematical model for the silting up of the marina. “Rather than dredge the entire area, which costs one to two million euros each year, this solution can limit efforts to priority zones covering just 10% of the marina,” enthuses Pierre. “This processing does not require sending data, which is only of use to the port of Valencia, to a distant server.”

Avenues for reducing energy consumption

Fog computing is also of interest as an alternative to the huge energy consumption of data centres. “Saving energy is generally not the primary reason for preferring fog computing, as this system is, for the time being, less optimised than the cloud,” tempers Pierre. “But in this day and age, we must take this option into consideration. We are therefore studying the energy efficiency of fog computing platforms, although we have not fully explored the subject yet.”

This issue is connected to those close to the heart of Jean-Marc Pierson, a professor at Université de Toulouse, director of the IRIT,2 and a specialist in less energy-hungry distributed systems. He is also a member of GDS EcoInfo, the CNRS service group for environmentally-friendly IT. “The challenge is that fog computing does not replace the cloud, but complements it,” says Pierson. “Overall consumption therefore increases. The system nevertheless requires smaller installations and infrastructure, less cooling, and can be easily powered by renewable energy.3 Studies are needed on the complete lifecycle of various computing centres in order to truly determine which solution consumes less.”

A popular area of research in fog computing and distributed systems in general is scheduling, in other words the optimised sequencing of computing tasks. Reducing data replication is also being studied, for instance for large and popular files such as videos and music. “In addition, we are looking at user acceptance of lesser service,” Pierson adds. “Slower access and images of poorer quality allow for substantial energy savings. It is almost a philosophical question: is it really useful to have immediate access to these resources whenever we want?”
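As a rough illustration of the scheduling problem mentioned above, the following Python sketch assigns tasks to nodes using a classic greedy heuristic, longest processing time first: tasks are sorted by decreasing duration and each one goes to the node that is currently least loaded. It is a textbook heuristic chosen for brevity, not a technique attributed to the researchers quoted here, and the function name `schedule_lpt` and the task durations are made up.

```python
import heapq


def schedule_lpt(durations, n_nodes):
    """Greedy longest-processing-time-first assignment of tasks to nodes.

    Returns (assignment, makespan), where assignment maps a node id to the
    list of task indices it runs and makespan is the load of the busiest node.
    """
    heap = [(0.0, node) for node in range(n_nodes)]   # (current load, node id)
    heapq.heapify(heap)
    assignment = {node: [] for node in range(n_nodes)}
    # Longest tasks first; ties keep their original order.
    for task, duration in sorted(enumerate(durations), key=lambda t: -t[1]):
        load, node = heapq.heappop(heap)              # least-loaded node so far
        assignment[node].append(task)
        heapq.heappush(heap, (load + duration, node))
    makespan = max(load for load, _ in heap)
    return assignment, makespan


assignment, makespan = schedule_lpt([5.0, 4.0, 3.0, 2.0], n_nodes=2)
print(assignment, makespan)
```

The heuristic does not guarantee the best possible schedule, and a scheduler for fog computing would also have to account for network distance and energy use, which this sketch ignores.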

By putting the emphasis on computing systems that are less powerful and hence less energy-intensive, fog computing could be an answer to approaches seeking greater energy sobriety. Existing systems, however, must be adapted and optimised so that this architecture is fully deployed.

- 1. CNRS / Université de Rennes.

- 2. Institut de recherche en informatique de Toulouse (CNRS / Institut national polytechnique de Toulouse / Université de Toulouse III - Paul Sabatier).

- 3. https://www.irit.fr/datazero/index.php/en/


Author

A graduate of the School of Journalism in Lille, Martin Koppe has worked for a number of publications including Dossiers d’archéologie, Science et Vie Junior and La Recherche, as well as the website Maxisciences.com. He also holds degrees in art history, archaeometry, and epistemology.