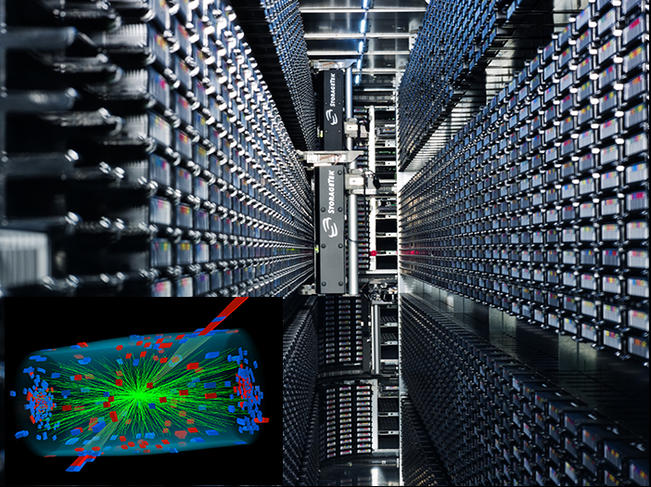

Storing Scientific Data for the Future

Have you heard of the “suitcase project”? A particle-physics research group wanted to use new software to analyze data from a study conducted 20 years earlier, but the data had been recorded on old magnetic tapes and stored in a suitcase abandoned in the corner of an office. The laborious task of recovering, reading and understanding the data followed.

Fortunately, the story ends well, with the researchers even discovering a new energy dependence in the area of fundamental forces. Yet the same cannot be said of all research projects that rely on older data. It is fairly common to come across older media that are inaccessible because the machines that read them no longer exist or, worse still, to find that the data were simply discarded once the project was completed and the relevant articles published.

The data community

As analytical instruments and software improve, almost all disciplines have witnessed an explosion in the amount of data created each year. These data are precious: very often they have been generated by complex and costly studies, in high-energy physics for example, or they result from periodic observations carried out over extremely long periods, such as tracking the positions of stellar objects or collecting demographic data.

It was in this setting that in 2012, the interdisciplinary PREDON project was created, spearheaded by Cristinel Diaconu, research director at the CNRS:1 “The explosion in the volume of data from experiments carried out at CERN made us reflect on their long-term preservation. Our community was initially built around an international organization named Data Preservation and Long-Term Analysis in High-Energy Physics. We subsequently discovered that many disciplines had the same issue, and this finding led us to the idea of forming an interdisciplinary community to examine the question of scientific data preservation.”

“How to ensure long-term data storage? How to ensure that data will be retrievable 10 or 20 years from now? How to ensure that the next generation of researchers will be able to understand currently archived data?” Within this forum, participants exchanged views on such questions to adapt storage strategies to their own sectors.

These questions have taken on even greater significance now that a Data Management Plan is requested under a pilot project of the EU research and innovation program Horizon 2020, a requirement that is set to become widespread.

Astronomy, a data preservation pioneer

The key pioneering sector in data preservation and sharing is without doubt that of astronomy. Structured catalogues began to appear in the 19th century as scientists amassed greater quantities of observational data essential to understand the sky and its evolution. Data sharing is thus crucial for research.

“To understand the physical phenomena at play in astronomy, we need to collate observations made using various techniques and work on data obtained by other instruments and other teams,” explains Françoise Genova, researcher at the CDS.2

To meet this need for data exchange and preservation, the international astronomy community set up the Virtual Observatory, a group of services that lets researchers draw from all available open-access astronomical data using a large index and shared standards.

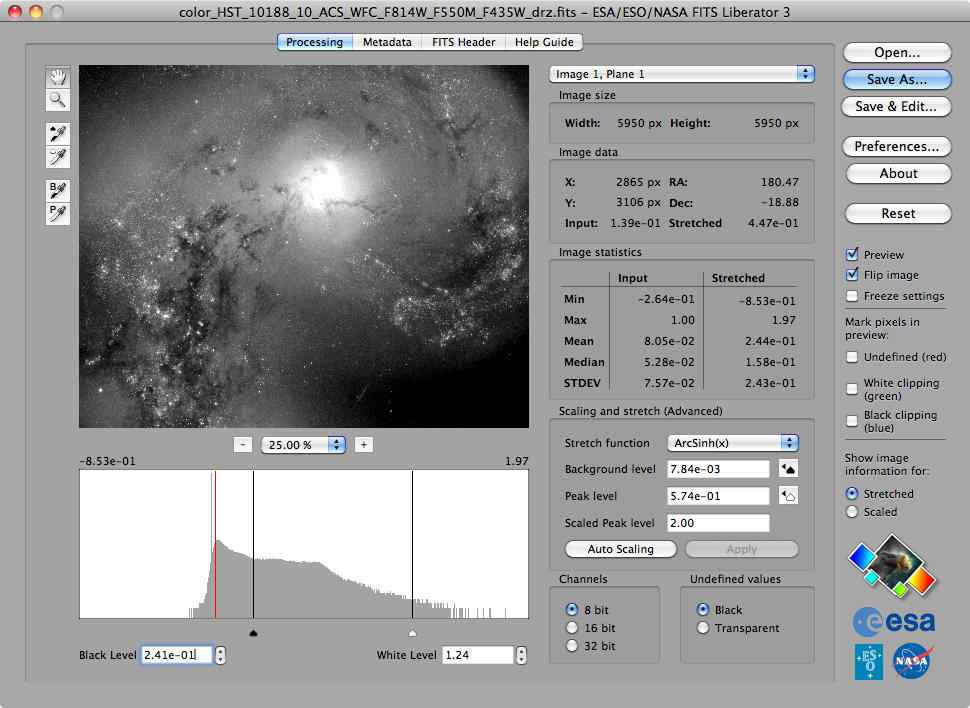

“At CDS, the preservation strategy is to store data in sustainable formats and label them using metadata recognized across the discipline. These metadata may either be present in the documents we receive (FITS, i.e. Flexible Image Transport System files) or added later by a CDS librarian,” says CDS engineer Gilles Landais. These data are then duplicated at eight mirror sites located in different countries.

Documenting data

Metadata (the “labels” that describe the content of the data itself) play a key role in all efforts at long-term data preservation: they allow rapid recovery and reliable usage of results collected years earlier by different teams using different instruments. In photography for example, metadata provide information about the type of camera used, the different settings for each shot, and occasionally, geolocation coordinates for GPS-enabled cameras.

Thus, in addition to the problems associated with the material storage and selection of data, there is the issue of rendering such data intelligible (and usable) upon receipt. “We carry out extensive work on the quality of data to ensure it can be reused. For example, data must incorporate contextual information such as the system of coordinates used to calculate a given position or details of the instrument with which the observation in question was made, and so on,” explains Genova. “If this information is missing, our librarians will attempt to track it down. This approach lends far greater relevance to data and enables precise retrieval to be performed.”
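The kind of check Genova describes, making sure each record carries its contextual information, can be illustrated with a minimal sketch. The field names below (`coordinate_system`, `instrument`, `observation_date`) are hypothetical and chosen only for illustration; they are not the CDS's actual metadata schema.

```python
# Hypothetical required fields; a real archive defines its own
# discipline-wide metadata standard.
REQUIRED_FIELDS = ("coordinate_system", "instrument", "observation_date")


def missing_metadata(record: dict) -> list[str]:
    """Return the required fields that are absent or empty in a record."""
    missing = []
    for field in REQUIRED_FIELDS:
        value = record.get(field)
        # Treat None and blank strings as missing information.
        if value is None or str(value).strip() == "":
            missing.append(field)
    return missing


# An illustrative record: the instrument is blank and the date is absent.
record = {
    "coordinate_system": "ICRS",
    "instrument": "",
    "position": (10.68, 41.27),
}
print(missing_metadata(record))
```

A record that returns an empty list would be considered complete under these assumed rules; anything else would be a candidate for the follow-up work the librarians carry out.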

The issues of data storage and sharing are considered from the outset in large-scale projects. The latest example has to do with the Gaia observation mission, whose objective is to map over a billion celestial objects. “In light of the huge volume of data involved, the project simulated catalogues and drew up a plan for data preservation and retrieval by the community before the mission was even launched,” adds Genova.

Such good practices are naturally in place for PREDON. “Above all, we explained how we use metadata,” states Landais. “This information is crucial for data preservation, as various disciplines are faced with a number of shared difficulties.” Sharing information about data storage methods is especially needed in fields that have seen a massive increase in the amount of data they handle, like crystallography or genetics.

Standardizing formats

It is only one step from good practice within a research unit to considerations of global strategy. In France, the dissemination and storage of digital data within the human and social sciences are overseen by a Very Large Research Infrastructure (TGIR), the TGIR Huma-Num.3 This service is provided in partnership with Cines,4 which provides the requisite archiving software and expertise.

“This partnership offers a number of advantages,” suggests Marion Massol, who heads the archival department at Cines. “First, the TGIR manages priorities between the different projects and assists laboratories with their data archival. This then allows us to assist producers in the design and development of software for data generation and organization within Cines facilities. The process is practically transparent for the researchers.”

Since 2006, Cines has managed long-term archival of data from all disciplines on a voluntary basis. “We try to adapt to the abilities of the person submitting data. The process is both customized and shared.”

In addition to the technical aspects of data storage, Cines pursues lobbying actions with the market players to ensure that their file formats will remain machine readable over the years to come. A team of engineers is engaged in an ongoing race against obsolescence to guarantee that software or reading equipment capable of accessing the data remains available. The FACILE platform keeps track of the range of formats currently accepted by Cines.

“We share these good practices within PREDON to help scientific communities organize and define standard formats according to their specific features,” Massol explains.

Among the communities who started promoting this openness, researchers carrying out climatic simulations for the Intergovernmental Panel on Climate Change (IPCC) were faced with the question of allocating experiments to different laboratories and countries. “The constraints imposed by re-harmonizing results for comparison and sharing forced the climatologists to agree on the use of standard data and metadata formats,” she adds.

Keeping down costs

The question of metadata is a central preoccupation of PREDON’s Diaconu: “Carrying out any kind of procedure on data without knowing its type or structure is doomed to fail. Everything must be documented. Metadata are normally the weak link: everyone knows their own procedures by heart and they feel that there is no need to record an adequate description. But when these people move elsewhere, the data entered is lost. Today, we are unable to store complex data that belongs to closed communities in such a way as to allow them to be reused in the future.”

The issue of survival of data beyond the existence of the particular community that produced them is therefore important. The universal response is to make such data public and open, and to work on the formats to ensure that they remain intelligible, easy to read and long-lasting.

For Diaconu, however, data loss appears to be a common fate. “When we wish to access data, either we cannot find them, or we can find them but we don’t know what to do because we don’t know what we are dealing with. Worse, some data are destroyed by researchers who feel they are no longer of any use after the completion of the related project. At the time, we may not appreciate it, but 10 years later, an ongoing project may overlap with a previous project; sadly, the potential for discovery may be lost since there is no longer any funding to carry out the necessary procedures.”

And archived data represents a treasure trove. Diaconu calculated the value of such data in his field: “My team and I realized that the additional cost of storing data represents about 1/1,000 of the global budget. Thus, publication of new articles based on use of archives in the ensuing 5 years represents a profit of 10%. We basically have research that costs almost nothing. Without any data storage strategy, we completely miss out on potential discoveries and low-cost research. Once data has been properly stored, however, its cost is practically zero.”
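The orders of magnitude Diaconu quotes can be compared with a short calculation. This is only a sketch under a loud assumption: that the 1/1,000 storage cost and the 10% gain are both measured against the same overall budget, which the quote does not spell out. The function name and the example budget are hypothetical.

```python
from fractions import Fraction


def preservation_return(budget, cost_share=Fraction(1, 1000), gain_share=Fraction(1, 10)):
    """Compare the storage cost with the gain from reusing archived data.

    Assumption: both shares are fractions of the same overall budget.
    """
    cost = budget * cost_share   # extra spending on data storage
    gain = budget * gain_share   # value of publications drawn from archives
    return cost, gain, gain / cost


# Illustrative budget of 1,000,000 (arbitrary units).
cost, gain, ratio = preservation_return(Fraction(1_000_000))
print(f"cost={cost}, gain={gain}, gain per unit of cost={ratio}")
```

Under that assumption the ratio does not depend on the size of the budget: it is simply the gain share divided by the cost share.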

Author

Holding degrees from both the Bordeaux Institute of Journalism and the Efet photography school, Guillaume Garvanèse is a journalist and photographer specializing in health and social issues. He has worked for the French group Moniteur.