You are here

Computers: Promises of the Quantum Dawn

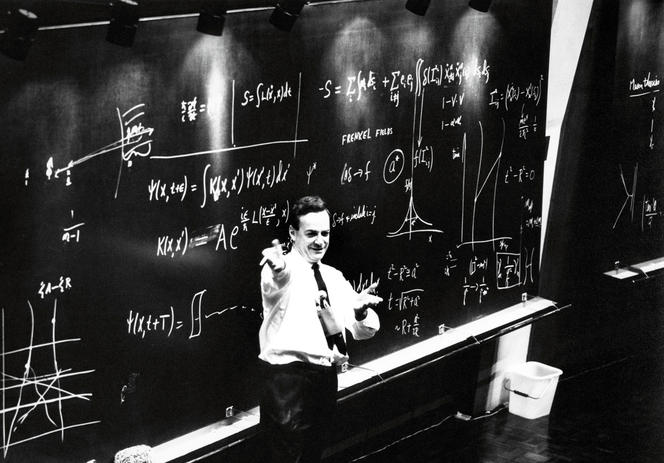

The world's most powerful supercomputers could soon be relegated to the prehistory of computing. For the most optimistic, the next few years will see the arrival of a new kind of machine known as quantum computer, offering phenomenal computing capacities. Imagined during the early 1980s by the winner of the Nobel Prize in physics, Richard Feynman, the concept of such a computer is today increasingly becoming a reality. "We are currently experiencing a pivotal period in which industrial actors like Google and IBM have seized upon the subject, which had previously been the domain of research laboratories. This will help us overcome major technological issues," enthuses Tristan Meunier of the Institut Néel.1 It's the same story for Eleni Diamanti of the Laboratoire d'informatique de Paris 6.2 "In the coming years we will have quantum computers that perform well enough to beat traditional computers on certain problems."

The power of superposition and entanglement

As its name suggests, a quantum computer uses the laws of quantum mechanics, a theory that describes physical phenomena on the atomic scale. These striking laws allow a particle, such as an atom or a molecule, to be in different states at the same time—referred to as the superposition of states. On an ordinary computer, information is coded in the form of bits that can have one of only two values, 0 or 1, whereas quantum bits (or qubits) can simultaneously take the values of 0 and 1, depending on whether an electrical current passes through a transistor. What's more, when two qubits interact, their physical states are "entangled," such that the two systems can no longer be described independently, which is referred to as entangled states.

Thanks to the phenomena of superposition and entanglement, a quantum computer can in theory have access to all of the possible results of a calculation in a single step, whereas a traditional computer would process the information consecutively, one result after another. It is this massive parallelism that is central to the quantum computer's power.

The computation speed of quantum algorithms

In the 1990s, researchers proposed algorithms for such computers, and mathematically demonstrated that when implemented on such machines, they would effectively perform certain calculations at speeds unimaginable using a classical computer. In 1994, the American mathematician Peter Shor of MIT presented an algorithm that could factorize any number, which is to say decompose it into a product of prime numbers, doing so in record time.

This would be enough to break most of today's cryptographic systems, from the encryption of bank transactions to the coding used to exchange state secrets, which precisely rely on the tremendous amount of time it takes to calculate the factorization of increasingly large numbers. A problem of this kind, which would take a classical computer a few billion years, would be resolved by a quantum computer in a matter of minutes!

Similarly, in 1997, Lov Grover from Bell Labs demonstrated with his algorithm that a quantum computer could considerably increase the effectiveness of the classical algorithms used to search for information in a database.

For example, the search for one item among ten thousand elements of data would only require approximately one hundred steps, as opposed to ten thousand for a traditional computer. The time it takes to process massive amounts of data would be considerably reduced, hence the interest of companies such as Google in this new technology.

The accumulation of qubits

What was left was to develop the building blocks of these quantum computers, the famous qubits, able to be in two states simultaneously. Physicists across the globe quickly got to work. Many candidates were tested, including atoms, ions, molecules, electrons, photons, and superconducting circuits. There have already been notable successes in the manipulation of these qubits. For example in 2003, Rainer Blatt from the University of Innsbruck in Austria created the first two-bit logic gate using calcium ions, a key device for performing operations through the coupling of qubits.

As for the greatest calculation exploit, it was achieved in 2012 by a team at the University of Bristol in England, which successfully used a photonic device to factorize the number 21, which is to say to show that it breaks down into 3 times 7. The performance was of course modest, but it represents a demonstration of the principle of Shor's algorithm, whose power could be used for much larger numbers.

For as we have seen, the advantage of quantum calculation over its classical equivalent is all the greater if the quantity of information being processed is large. In other words, "in order to perform well and be of interest, a quantum computer must include a large number of qubits. For factorization problems, a thousand must be coupled at the very least," points out Simon Perdrix of the Laboratoire lorrain de recherche en informatique et ses applications.3

The obstacle of decoherence

And that is precisely the problem. "In order for a quantum computer to function, its qubits must keep these quantum properties during the calculation. Yet interactions with the environment (magnetic field, light, thermal agitation...) can all cause a quantum system to lose its properties. And this is all the more true given the number of qubits contained in the system," explains Sébastien Tanzilli of the Institut de physique de Nice,4 who is in charge of quantum technologies for the CNRS, and represents France within the Quantum Community Network, the committee tasked with steering the European initiative Quantum Technologies Flagship. This phenomenon, referred to as decoherence, represents the primary obstacle in the construction of these computers.

Yet far from being discouraged, physicists tried from the beginning to better understand the process, and did everything they could to control it. As a result, since the development of the first qubits nearly 25 years ago, their coherence time has continued to increase, and an ever-larger number of qubits have been successfully entangled. "Advances in the conception and manipulation of qubits have surely but slowly expanded the number of qubits, with nobody being able to say where this limit is located," observes Meunier. Currently, the record number of entangled qubits is 20. It is true that in 2018 Google announced that it had produced a quantum processor made up of 72 qubits, but without demonstrating how many of them were effectively entangled.

Promising implementations

In this race for the greatest number of qubits and the creation of a quantum computer, two systems, currently neck and neck, offer the most interesting prospects. The first involves trapped ions. Developed in the early 1990s, these are atoms—especially calcium—that have had one or more electrons removed, and that are then trapped in a vacuum using lasers. They hold the record for coherence time, which has reached multiple minutes in certain devices. The disadvantage is that they are slow to manipulate, which slows down calculations. Another drawback is that "trapping techniques are relatively complicated to implement, such that it is difficult to see how it's possible to increase size and reach 1000 qubits," notes Tanzilli. Some have already imagined solutions for doing so, although this remains a major challenge.

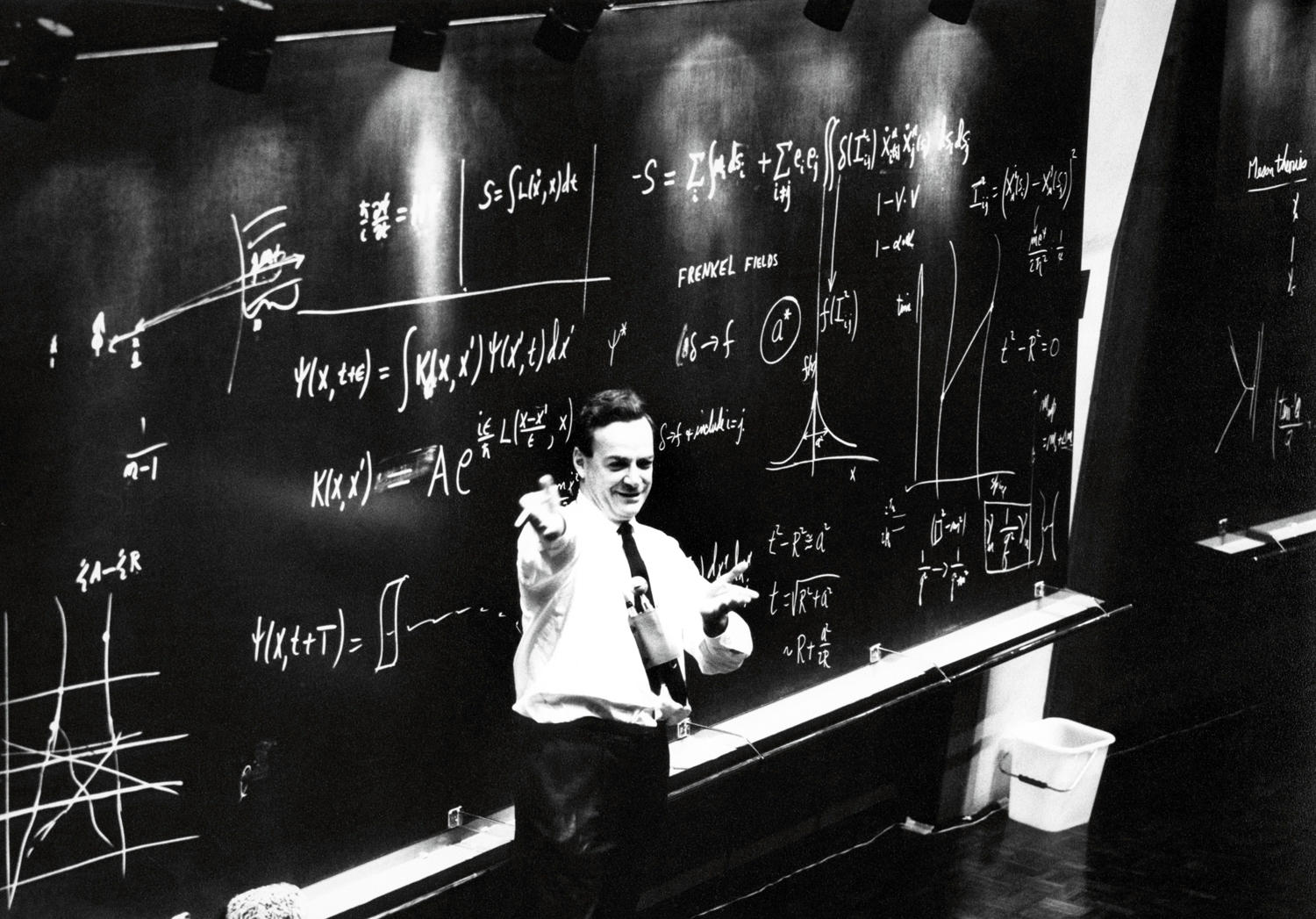

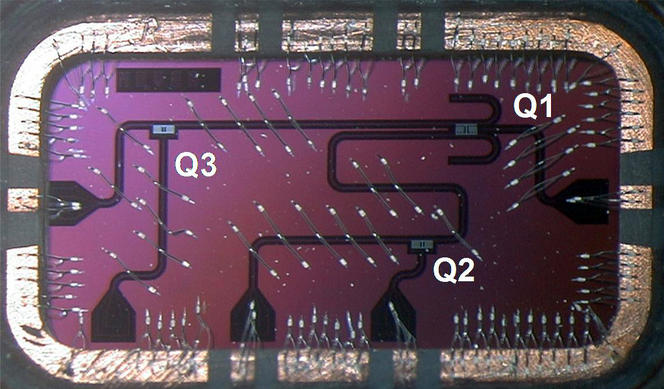

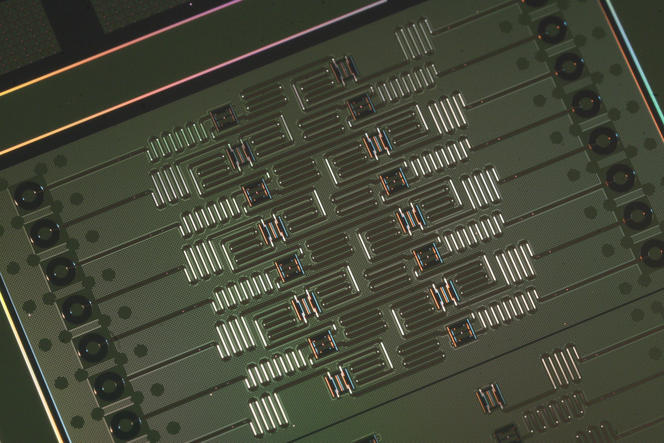

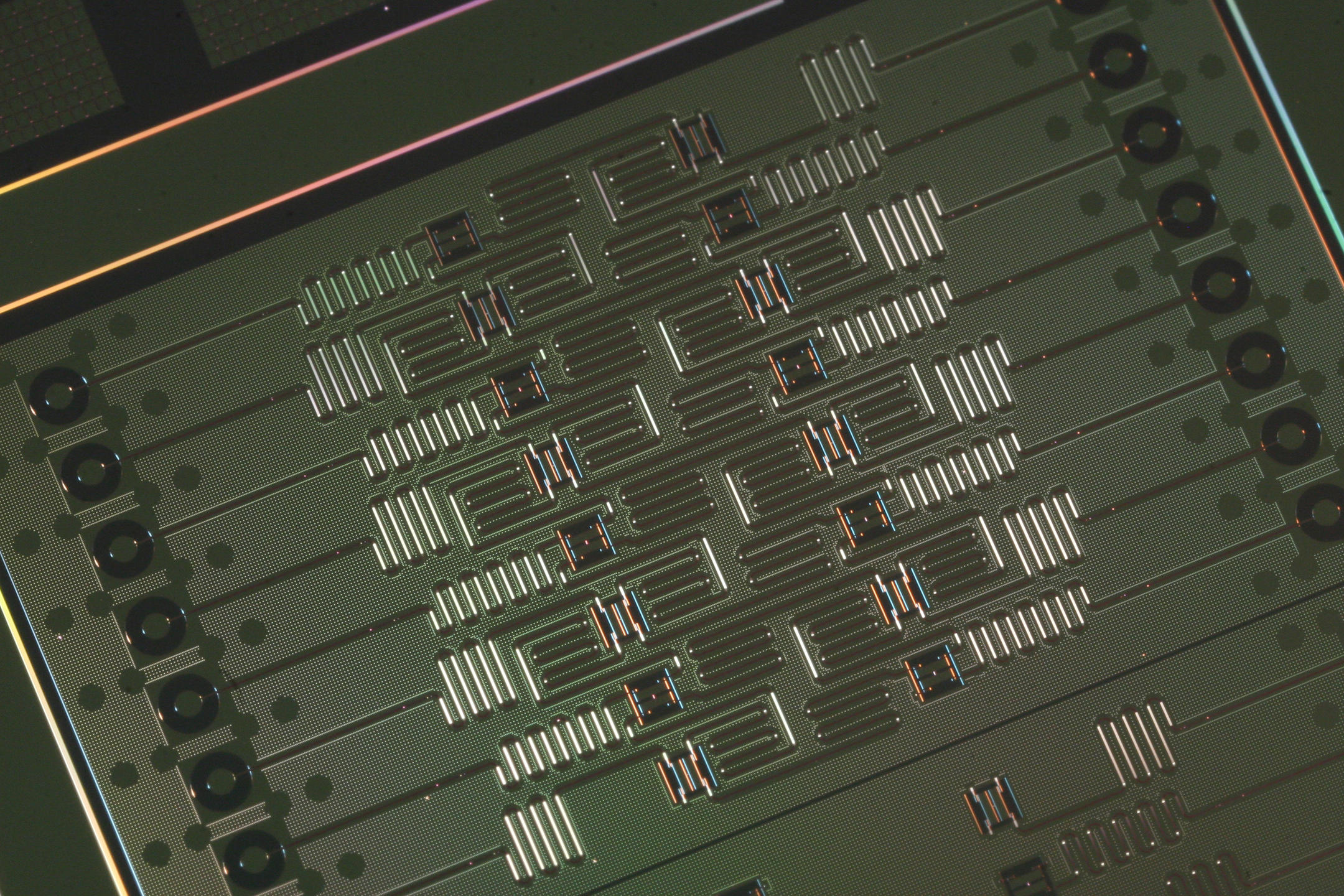

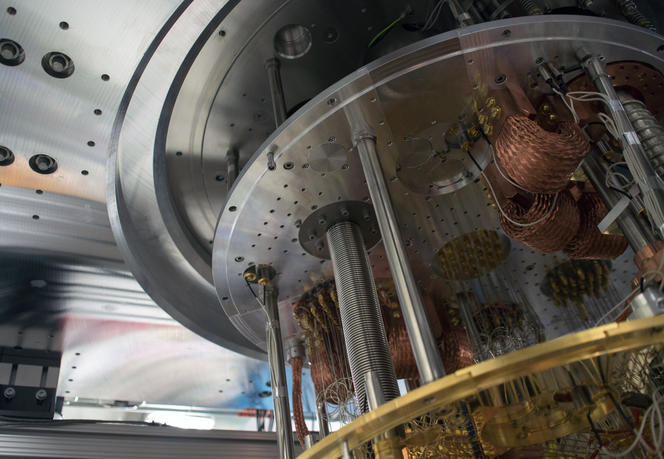

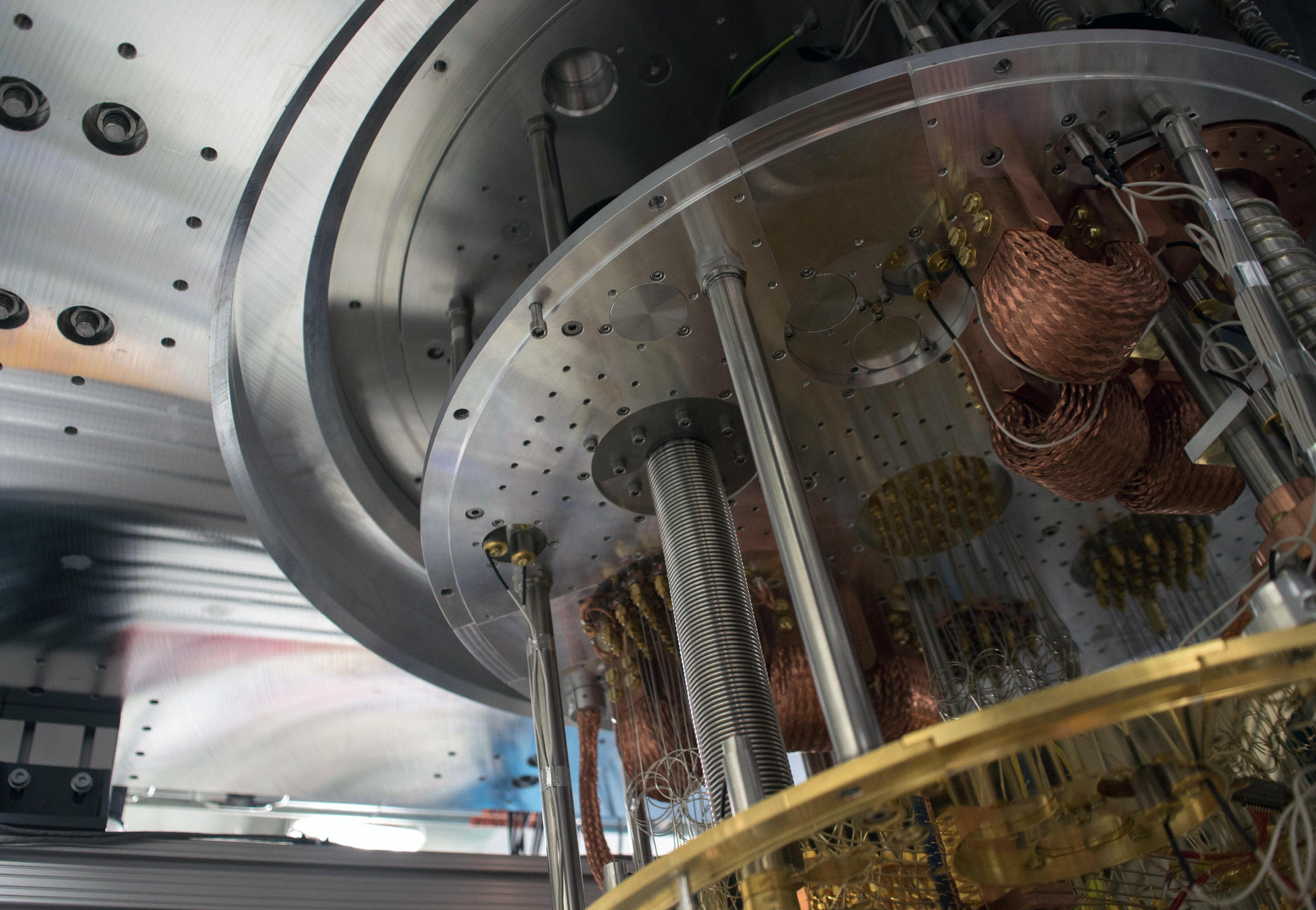

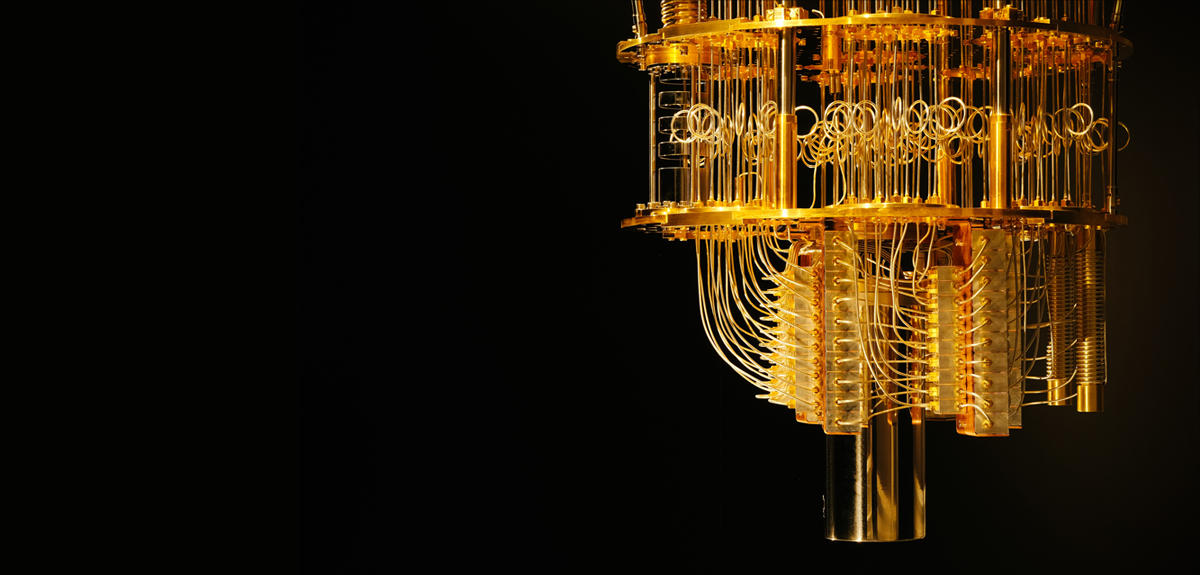

The second favourite is superconducting circuits. These micrometric-sized electrical circuits appeared in the late 1990s, and include metallic electrodes that become superconducting—which is to say they conduct electricity without resistance—at very low temperature. Thanks to ultra-thin barriers between these electrodes, known as Josephson junctions, these circuits behave like artificial atoms whose quantum state can be manipulated. With regard to decoherence, superconducting qubits are less effective than trapped ions, but they are faster to manipulate.

For Patrice Bertet of the Service de physique de l’état condensé au CEA Saclay,5 the laboratory that played a pioneering role in the development of these systems, they offer an additional advantage: "The production technique is fairly simple, which makes it easy to duplicate and integrate a large number of them on the same chip." It explains why these devices are so popular with industry. IBM and Google notably chose this option for their machines.

More recently, a third outsider has joined the race, namely electron spins in silicon, which involve isolating electrons in a silicon matrix and using their spin—or the particle's rotation around itself—as a quantum bit of information. Developed over the last five years, these qubits are still relatively "fragile," and only two of them have been successfully entangled to date. However, it is generally agreed that they will soon achieve the same levels of performance as the two pioneering devices. In particular, "they are the only ones that can be integrated on a very large scale. Their production uses exactly the same techniques as those of micro and nanoelectronics, which we have a good command of, and subsequently enables their extreme miniaturization," Meunier adds. He is enthusiastic about this technology's potential, and is one of the leaders of the QuCube project being conducted in partnership with two other laboratories,6 whose objective is to develop a silicon-based processor that includes 100 qubits within the next six years.

So which of these three candidates will win out, and lead to the creation of the first quantum computer? "It's impossible to say, as each device offers certain advantages that the others do not have. None of them can today presume to come out on top," believes Tanzilli.

Essential error correction

One thing is certain, and that is improving qubit performance will not be enough. To make the quantum computer a reality, it is also important to correct calculation errors resulting from decoherence. Mathematicians and computer scientists quickly understood this, and developed correcting codes, the quantum equivalent of the error-correcting algorithms used in our computers. It has even been demonstrated that in theory, if a qubit's error rate is below a certain value, it is possible to correct errors faster than they occur. "The idea of correcting codes was a small revolution in the field. Even the most pessimistic began to believe in the possibility of a quantum computer," admits Tanzilli.

The principle of correcting codes is simple. They use a group of multiple "physical" qubits to code the information of a single "logical" qubit. By measuring the properties of physical qubits, we can determine—thanks to entanglement—whether the logical qubit is no longer in the desired state, and correct it immediately. However, their implementation is more complicated in practice, as it is believed that it would take 1,000—and even 10,000—physical qubits for each logical qubit that can be used for calculation. In other words, the ideal quantum computer should include not just a few thousand qubits, but a few million! "The manipulation and control of such a large number of qubits remains largely beyond reach," warns Patrice Bertet. This has not prevented physicists from testing these methods on a small number of qubits.

Eleni Diamanti recognizes that a theoretical breakthrough is needed to increase the performance of these codes and reduce the number of qubits they require. "Only then will we have a quantum computer worthy of the name. Computer scientists, mathematicians, and physicists are working on this together, and I am convinced that they will one day solve this problem."

Toward quantum micro-calculators?

Not everyone wants to wait for the emergence of these universal quantum computers, which when equipped with the best qubits and high-performance correcting codes, will be able to perform any complex calculation. "The current trend, which has led to an enormous amount of research, is to identify which problems, using which algorithms, can be solved by near-term computers with fewer qubits and no error-correcting system," notes Iordanis Kerenidis, of l’Institut de recherche en informatique fondamentale,7 and director of the Paris Centre for Quantum Computing.

The first step toward this objective will be to demonstrate quantum supremacy, in other words to experimentally prove for a given algorithm the advantage of the quantum over the classic. Specialists believe that this milestone should be achieved in just five years, with the appearance of small quantum calculators containing 50 to 100 qubits. Researchers are preparing for this, and have already identified a type of mathematical problem—probability distribution calculations—that lends itself to such a demonstration. "Its resolution will certainly not be of practical utility, but it will launch the use of quantum machines for problems that are worthy of interest," posits Kerenidis.

From quantum calculation to simulation

The fields of chemistry and materials science should be the first to benefit. There are predictions that with machines of a hundred qubits, the synthesis of new molecules or the development of materials with unprecedented properties will be largely accelerated. How? By using quantum computers for simulation rather than calculation. The idea, which was originally suggested by Richard Feynman, is to imitate complex physical systems (molecules, materials, etc.) with the help of simpler artificial quantum systems, namely qubits. By varying parameters at will (distance of atoms, force of interactions...), which are not adjustable in real systems, we can model their dynamics and thereby understand them better.

Quantum simulation has already yielded results, but with a greater number of qubits it promises even more spectacular advances. "The advantage of simulation is that decoherence is ultimately no longer an enemy, because the systems that are simulated are themselves subject to this phenomenon. Thus there is no need to have perfect quantum computers," stresses Simon Perdrix.

With computers of a few hundred qubits, numerous other applications could be created. Firstly, optimization tasks could be made much more efficient thanks to quantum calculation, with numerous sectors standing to benefit, from the management of road traffic to energy transportation networking and financial prediction.

A revolution for machine learning?

The acceleration of calculation speed would also have major repercussions in machine learning, a very fashionable technique in artificial intelligence that is used to analyse and sort data in very large digital databases. Once again there will be numerous applications ranging from improved web search engines to much more precise medical diagnostics, to cite just two. "In both optimization and machine learning, we are searching not for exact solutions, but to provide answers that are satisfactory. We can therefore tolerate errors much more than in a factorization problem, for example. That is why the use of even near-term quantum computers will contribute so much," emphasizes Kerenidis.

It is not by chance that the field of quantum algorithmics has never been as active as it is today. A single example: in 2017, Kerenidis presented a machine learning algorithm that can, in theory, recommend films, books, or matches that are exponentially more effective than using current methods. Who knows when an actual quantum computer will be created, and whether it will indeed become a reality, but along the path toward its creation, the prospects for the average person are extremely tantalizing.