
The challenges of frugal AI

The increasing popularity of artificial intelligence, especially via conversational agents such as ChatGPT, has only expanded the global energy footprint of digital technology. The search for more frugal AI is drawing the attention of scientists, who are well aware of this crucial issue in today’s climate context. But first and foremost, what is the actual share of AI?

“Since digital technology is everywhere, it is complicated to evaluate its impact,” states Denis Trystram, a professor at the polytechnic institute of Grenoble, in southeastern France, and a member of the LIG¹. “For example, should the consumption of Tesla vehicles be counted under the transportation or digital category? Should the energy used to purchase tickets online be included in the balance sheet for rail transportation or the Internet? Overall, it is estimated that digital technology represents 4-5% of global energy demand. This figure is nevertheless destined to rise ever more rapidly, especially due to the development of AI. Digital already consumes more than civil aviation, and could equal the consumption of the entire transportation sector by 2025. Data centres alone use 1% of the planet’s electricity.”

A solution for local data processing

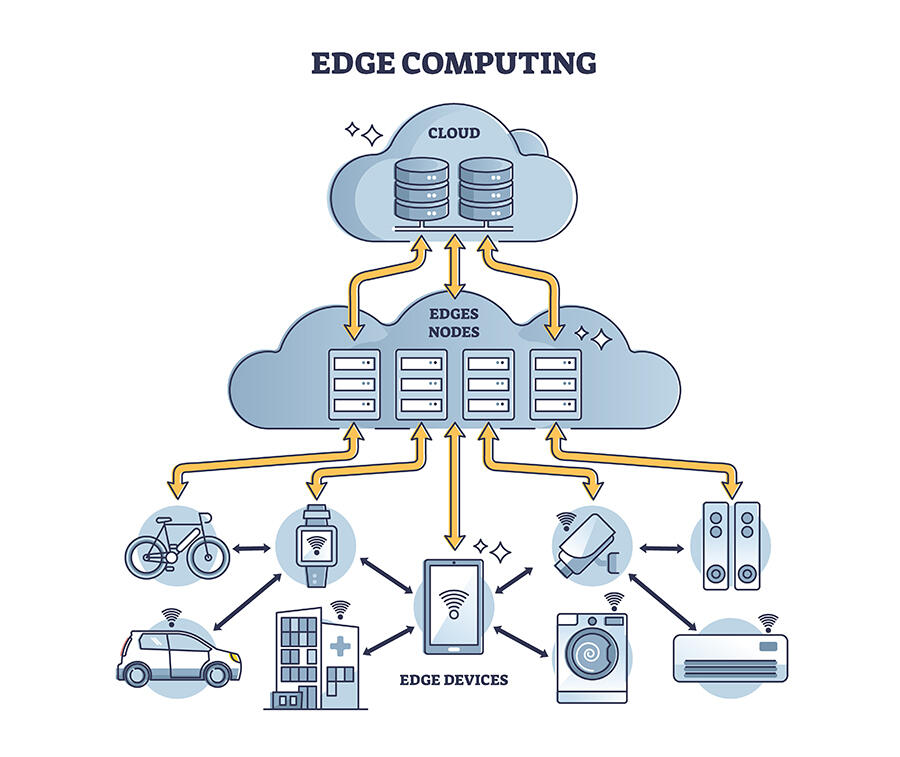

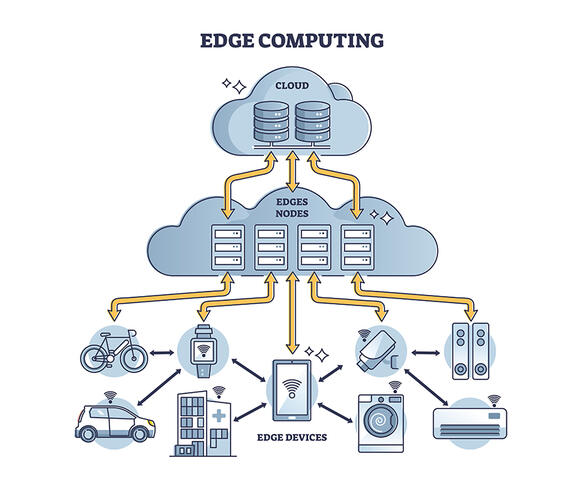

Trystram, who also holds a chair at the Grenoble Alpes Multidisciplinary Institute in Artificial Intelligence (MIAI), has long worked on the optimisation of distributed systems, that is to say networks of mobile devices such as the Internet of Things (IoT). Initially focused on computing performance, his research gradually evolved towards reducing the energy consumption and environmental impact of digital technology and machine learning. He has concentrated on the concept of edge computing, especially via the Edge Intelligence² research programme.

Unlike conventional systems, in which data is centralised and exploited in a limited number of powerful servers, edge computing stores and processes data as close as possible to where it is produced, thereby reducing the circulation of large volumes of information. While these connected objects are much less powerful than data centres, they are, most importantly, less costly and less energy-intensive. They of course cannot be used to train complex AI models, but they can run algorithms that are already operational.

“With Edge Intelligence, we identify the scenarios in which local processing is a more frugal option than centralising everything,” Trystram adds. “I also produce tools for measuring AI consumption, in order to inform users.” It is important to keep in mind, he stresses, that most of the “data used on the Internet is not generated within data centres, but instead comes from cameras, computers, and mobile phones. It is therefore sensible to use it as close as possible to the machine that generates it, with a view to relieving networks, and avoiding the transmission of information that we are not yet certain will be used.”
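
The comparison Trystram describes, local processing versus centralising everything, can be sketched as a simple energy budget. The Python below is only an illustration under stated assumptions: the function names, the linear cost model, and every number passed to it are hypothetical placeholders, not measurements or tools from the Edge Intelligence programme.

```python
# Illustrative sketch only: the linear cost model and all parameter values
# are hypothetical, not figures from the Edge Intelligence programme.

def local_energy_j(n_items, joules_per_item_on_device):
    """Energy (joules) to process every item on the device that produced it."""
    return n_items * joules_per_item_on_device


def central_energy_j(n_items, bytes_per_item, joules_per_byte_sent,
                     joules_per_item_on_server):
    """Energy (joules) to send every item over the network, then process it centrally."""
    transfer = n_items * bytes_per_item * joules_per_byte_sent
    compute = n_items * joules_per_item_on_server
    return transfer + compute


def more_frugal_option(n_items, bytes_per_item, joules_per_byte_sent,
                       joules_per_item_on_device, joules_per_item_on_server):
    """Return 'local' or 'central', whichever uses less energy in this toy model."""
    local = local_energy_j(n_items, joules_per_item_on_device)
    central = central_energy_j(n_items, bytes_per_item, joules_per_byte_sent,
                               joules_per_item_on_server)
    return "local" if local <= central else "central"
```

In this toy model, large items such as video frames make the transfer term dominate and favour local processing, while small items processed on a much more efficient server can favour centralisation. Identifying where that boundary lies in real deployments is the question the programme addresses.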

In the absence of a miracle technological solution that will eliminate the energy demand of digital technology in one fell swoop, fostering a sense of responsibility among users is seen as essential. This requires determining the true cost of practices that are common today, such as watching a video in high definition on a mobile phone.

Trystram is also collaborating with philosophers, sociologists, and experimental economists. Their tools, which emerged from the human and social sciences, help to see things more clearly and to analyse problems from an overall perspective. “There are a number of possible reactions to the impact of digital technology on the climate crisis. The easiest, that of the Internet giants, is business as usual, all while affirming that data centres are greener. However, zero carbon is never attained if all material parameters are taken into consideration. Performance optimisation can reduce the energy impact of major digital platforms by up to 30-40%, but these gains are offset by expanding uses. To go further, it is necessary to call the entire model into question as well as examine behaviour, and determine what practices are truly necessary.”

Genuine room for improvement

This opinion is shared by Gilles Sassatelli, a research professor at the Laboratory of Computer Science, Robotics, and Microelectronics of Montpellier (LIRMM)³, where he works on AI for embedded systems as well as on the use of renewable energy to power vast computing systems. He also exploits the physical properties of materials to obtain electronic components that can complete functions now exclusively performed with digital technology, in order to free AI of certain computing tasks. Like Trystram, he studies edge computing. “We should not delude ourselves: AI is seen as a driver of economic growth by many sectors of activity,” he says. “As it currently stands, all of the scientific progress in terms of energy efficiency for AI will be offset by a rebound effect.” Instead of being treated as permanent, these savings are viewed as an opportunity to expand the use of digital tools.
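
The arithmetic behind the rebound effect can be made concrete with a deliberately simple model, which is an assumption of this sketch rather than anything stated by the researchers: each unit of use costs a fraction `(1 - efficiency_gain)` of what it did before, while total use is multiplied by `usage_growth`. Both function names are hypothetical.

```python
def net_energy_ratio(efficiency_gain, usage_growth):
    """Energy after / energy before, under a simple multiplicative model.

    efficiency_gain: fraction of energy saved per unit of use (0.3 means 30%).
    usage_growth: factor by which total use is multiplied (2.0 means doubled).
    """
    return (1.0 - efficiency_gain) * usage_growth


def break_even_growth(efficiency_gain):
    """Usage growth factor at which the efficiency gain is fully cancelled."""
    return 1.0 / (1.0 - efficiency_gain)
```

Under this model, the 30-40% optimisation gains quoted by Trystram are wiped out once usage grows by a factor of roughly 1.43 to 1.67, and any growth beyond that raises total consumption despite the more efficient platform.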

The field remains fixed on the notion that the bigger a model, the better. Yet this is a very inefficient way of proceeding. There is a great deal of room for improvement in terms of efficiency, although researchers still have not found the scientific keys to unlock this potential. For instance, significant savings could be secured by identifying where the numerical precision of computations in neural networks can be drastically reduced, but this is just a first step.
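
One widely used way of lowering the precision of neural-network computations is to store values as 8-bit integers instead of 32-bit floating-point numbers. The sketch below shows the principle with a single shared scale factor; it is a generic illustration, not the method studied at the LIRMM, and the function names and sample weights are made up for the example.

```python
def quantize_int8(values):
    """Map floats to integer codes in [-127, 127] using one shared scale factor."""
    max_abs = max(abs(v) for v in values)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    codes = [round(v / scale) for v in values]
    return codes, scale


def dequantize(codes, scale):
    """Recover approximate floats from the integer codes."""
    return [c * scale for c in codes]


weights = [0.82, -0.31, 0.05, -1.27, 0.44]  # made-up sample values
codes, scale = quantize_int8(weights)
restored = dequantize(codes, scale)
# Worst-case rounding error introduced by the lower precision.
max_error = max(abs(w - r) for w, r in zip(weights, restored))
```

Each 8-bit code takes a quarter of the storage of a 32-bit float, and the rounding error per value is bounded by half the scale factor. The open question raised above is where in a network such a loss is tolerable.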

Various approaches on the frontiers of neuroscience, mathematics, and fundamental physics offer interesting prospects. The human brain shows that there is tremendous room for progress, as it performs all of its tasks with just about 10 watts, less than the power drawn by a bedside lamp.

“For now, none of these ‘emerging’ models can scale up and compete with the conventional AI in production,” Sassatelli cautions. “The same problem limits the use of renewable energy in data centres, whose ‘neural’ architecture is not optimal; rethinking it by drawing inspiration from edge computing can open pathways towards more virtuous solutions, with digital technology that is closer to humans and their uses, as well as more responsible. The issue is ultimately more societal than scientific: what role do we want AI to have in future societies? We already see regulations being discussed in Europe and the United States. A national and international community of researchers is forming around sustainable digital technology, but we nevertheless must get more involved in these issues.” ♦

- 1. Laboratoire d’Informatique de Grenoble (CNRS / Université Grenoble Alpes).

- 2. https://edge-intelligence.imag.fr/

- 3. CNRS / Université de Montpellier.

Author

A graduate of the School of Journalism in Lille, Martin Koppe has worked for a number of publications including Dossiers d’archéologie, Science et Vie Junior and La Recherche, as well as the website Maxisciences.com. He also holds degrees in art history, archaeometry, and epistemology.