How social networks manipulate public opinion

The Politoscope, which you oversee, offers a different perspective on the presidential campaign now underway in France. What is the purpose of this tool, launched in 2016 at the ISC-PIF institute of complex systems and successfully deployed during the previous election?

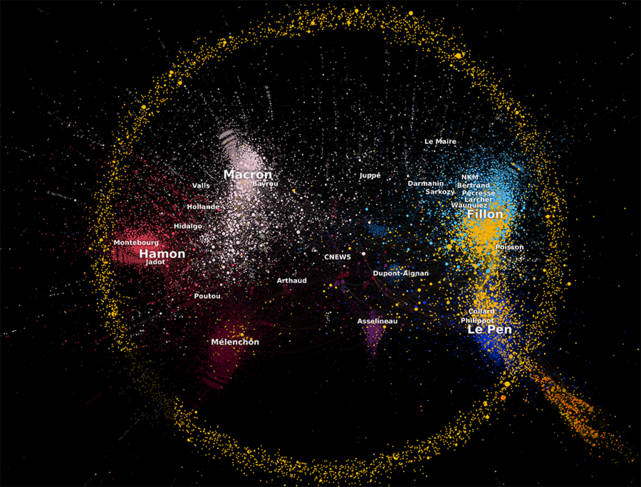

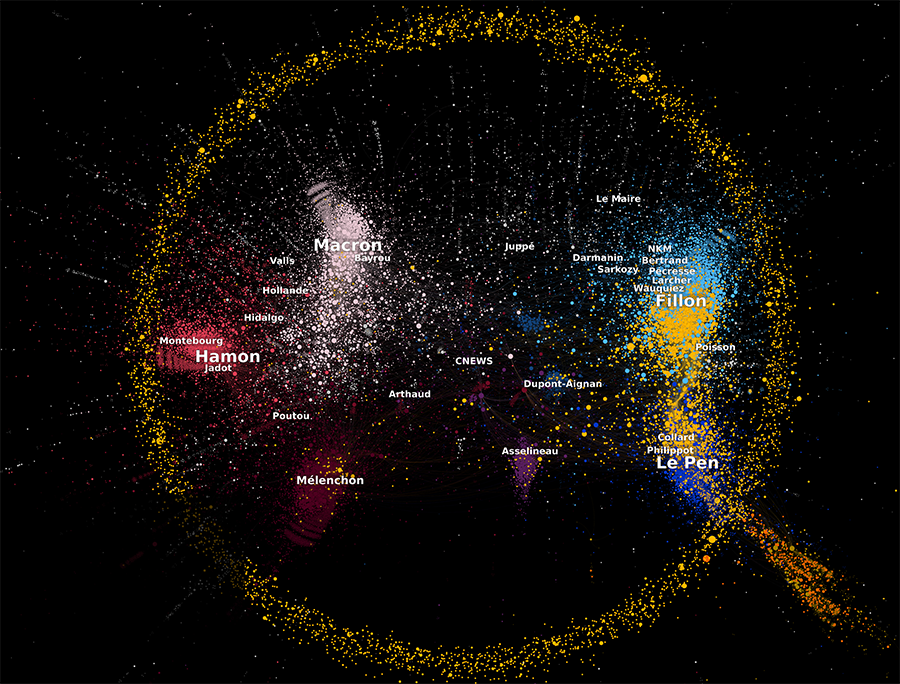

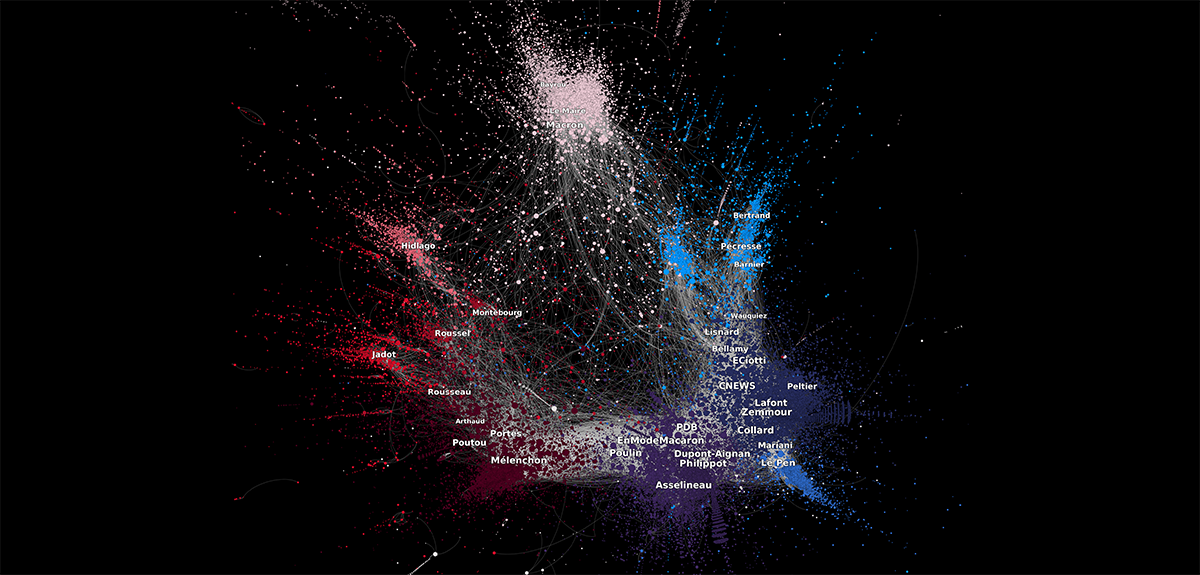

David Chavalarias:¹ The purpose of the Politoscope is to offer the general public an insightful look at the masses of data generated on social networks in the run-up to the presidential election, using analytical tools and methods developed by research. The goal is twofold: over the long term, to help put politicians’ statements into clearer context, and in the short run, to identify collective actions devised to influence public opinion. The project studies all social networks but focuses primarily on Twitter, a medium that is widely used by political figures and their activist bases. It monitors approximately 3,000 elected politicians and party officials, in addition to specific keywords like #présidentielle2022 or #régionales, scanning 500,000 to 2 million tweets every day. It provides a perspective on the evolution of social groups and alliances between political parties, as well as the information they disseminate.

What different types of opinion manipulation have you identified?

D. C.: In 2017 we observed three types of manipulation. The most conventional consists in spreading disinformation to influence the vote, most often by denigrating a candidate. At the other end of the spectrum are operations that reveal personal information or internal data concerning a political party just before the ballot, leaving no time to verify the accusations, in the hope of instilling doubt and inducing a segment of the electorate to change their vote. I’m thinking in particular of the ‘Macron Leaks’, which were published on the eve of the second round of voting in 2017.

The third type of manipulation consists in multiplying online messages containing biased information. These operations are usually carried out anonymously, or under a misleading identity. For example, in 2017 a number of messages hostile to the candidates Benoît Hamon, Jean-Luc Mélenchon or Emmanuel Macron, using language typical of the political communities supporting François Fillon or Mélenchon, were actually written by foreigners, presumably members of the American far right whom we were able to monitor on the forum 4chan. For important elections, these communities are mobilised online to promote the agenda of this new globalised extreme right.

How does this kind of online manipulation work?

D. C.: Expanding an online presence, through accounts managed by robots or humans, gives the impression that more people support or reject a given idea than is actually the case.

When we are undecided, we have a natural tendency to look at what others are thinking to form our own opinion. Suggesting that many people around you are supporting a given cause increases the probability that you will endorse it yourself. In addition, the artificial amplification of online activity makes it possible to manipulate the digital platforms’ recommendation algorithms, which then spread your content well beyond the circle of users who already share your ideas.

Reversing the opinion of 10% of users can be enough to achieve the desired effect, given that 10% of the French population on Twitter represents 900,000 people. That’s more than the margin of votes between Marine Le Pen and Jean-Luc Mélenchon or François Fillon in the first round in 2017.

How does this digital technology break the social fabric?

D. C.: Social networks have been in use for less than 20 years, so we don’t have much perspective on the impact they have on the organisation of social life at the scale of a country. All the information in the online world is controlled by platforms like Facebook and Twitter, digital environments that monitor your actions and those of others in order to decide what to show you. We know that there is a bias that makes people favour more stressful information. For example, when a vaccine is discovered, if one headline asserts that it’s 90% effective and another claims that it causes sterility, most users will read the latter type of message first.

This can lead to the formation of ‘echo chambers’ – groups of people who interact closely and end up thinking the same thing, even if it is based on ‘alternative facts’. In late 2021, the whistle-blower Frances Haugen revealed that Facebook had steered more than 80% of the Covid-related disinformation to fewer than 4% of its users. In this way, segments of the population can become isolated, with a single view of the world that can be completely wrong. This in turn can result in the balkanisation of public opinion, characterised by excessive hostility between groups. This phenomenon reached its peak in the United States, where extreme bipolarisation culminated in the invasion of the Capitol building on 6 January 2021.

According to your recent observations, is this problem becoming more acute in France?

D. C.: We have seen a radicalisation in the past five years. In 2017 the political landscape stretched from the extreme left to the extreme right and information circulated via the moderate parties like the PS, LREM and LR. Today, two new and highly influential extreme right online communities have emerged around Éric Zemmour and Florian Philippot. Philippot’s partisans have been especially active during the pandemic, which they used to generate hostility towards the government and the traditional parties. We have also observed that many online supporters of François Fillon and Marine Le Pen in 2017 are now present in Philippot and Zemmour’s digital communities. The latter has carried out recurrent coordinated actions on the social networks with the aim of creating polarisation.

In July 2021, the Kremlin Papers revealed the strategy adopted by Russia against the countries that imposed sanctions on it after the 2014 Ukrainian crisis. Will the new war in Ukraine intensify Russian intervention in Western social media?

D. C.: Since 2013, Russia has made a habit of intervening in the digital realm beyond its borders. This seems to be inspired by the writings of Sun Tzu, a Chinese strategist of the 6th century BC, who recommended making no distinction between peacetime and wartime, but rather weakening the enemy relentlessly on its own turf. Russia has been targeting the United States and Europe, seeking to widen the gap between citizens and their government by spreading false information, engaging in pro-Kremlin propaganda, and making political and business figures dependent on its cause by financing them or involving them in Russian enterprises. One notable example is François Fillon, who joined the boards of directors of several Russian companies.

At the Internet Research Agency (IRA) in Saint Petersburg, several hundred employees spend their days disseminating fake news on the social networks. During the early stages of fighting in Ukraine, for example, they spread the rumour that the Ukrainian government had fled the country. Had this ploy really worked, the Ukrainian troops would not have fought with such fervour.

Are the online platforms better equipped today to combat this kind of disinformation?

D. C.: There are still problems. Firstly, their data and algorithms can only be accessed by their own personnel. To oversee all the information concerning 2.8 billion Facebook users would require massive resources. Unless there is a legal or image risk, the company has no reason to make such a huge investment. Secondly, these platforms’ economic model is based on advertising and recommendations. Haugen revealed that Facebook changed its standards in 2018. Before then, the algorithm’s basic criterion was the amount of time spent on content. Now it’s engagement, in other words the number of clicks, comments and posts, which generates more profit despite the fact that cognitive biases make these environments more toxic. As long as their main parameter for the circulation of information is economic, the social networks will never be able to combat disinformation effectively.

You believe that the current Big Tech economic model, based on the commodification of social influence, is incompatible with the sustainability of democracy…

D. C.: Yes, quite – in particular due to one of the first theorems in the social sciences, the Heinz von Foerster-Dupuy hypothesis.² Part of systems theory, this principle, interpreted in the context of human societies, tells us that as the quantity of social information increases, the collective dynamics gain momentum, grow in scale, and become less predictable. Large groups of people will, for example, try to buy the same thing or follow a populist leader. Another part of the postulate explains that if you have all the data on the behaviour of a population, you are more likely to accurately anticipate the direction of these collective movements when the level of social information rises. This is, for example, what enables Amazon to predict how well a book is likely to sell and adjust its logistics accordingly.

Since forecasting consumer behaviour generates income for the platforms, it is in their interest to become more ‘social’, which in turn makes human collectives less stable. Lastly, greater social information in a society reinforces ‘path dependency’, i.e. the fact that the first phase of a collective momentum strongly determines its subsequent direction. This gives enormous power to those who are capable of acting on a social system from the beginning of its dynamics, in particular opinion influencers.

Do these mechanisms pose a threat to democracy?

D. C.: If the major digital platforms continue to make the economic factor their benchmark for controlling the circulation of information in our societies, democracy risks being obliterated by populist movements. The latter benefit from the ‘manipulability’ of our informational environment on the social networks and the weakness of our institutions. Examples include Jair Bolsonaro in Brazil and Viktor Orbán in Hungary. The risk is especially high in France, where the flaws in our presidential electoral process – just like in Brazil – make it possible for a radical candidate to get through to the second round of voting despite being rejected by a majority of the population.

As a result of the balkanisation of public opinion due to the effect of increasingly negative electoral campaigns, voters whose candidate does not make it to the second round can be sorely tempted to abstain or cast a populist vote. If the abstention rate is high enough, even a contender rejected by most of the population could get elected by securing a mere 20 to 30% of ballots.

How in your opinion can these phenomena be countered?

D. C.: I won’t list all the options here, but in Toxic Data I propose 18 measures. First of all, we can take individual action to ensure a healthy online life. As for collective initiatives, to mention only two, we must urgently change our electoral process, adopting for example the majority judgement method, which is much less prone to manipulation and makes it impossible for someone whom the majority rejects to be elected. It is also time to start regulating the digital platforms, considering that modifying one line of code on Facebook, for example, has an immediate effect on the daily lives of 2.8 billion people. I propose exploring the prospect of a law against the violation of democracy: if a platform deployed on a nationwide scale is capable of undermining social cohesion, it should be possible for its algorithms to be audited by an ad hoc authority and for adaptations to be imposed. It is totally feasible to formulate legislation that would allow private companies to participate in the circulation of social information while strengthening our electoral system and institutions against the manipulation of public opinion.
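For readers unfamiliar with majority judgement, the core idea can be sketched in a few lines of Python: each voter grades every candidate on a common scale, and the candidate with the highest median grade wins. This is only a minimal illustration, not the full method. The grade scale, the function names and the ballots below are all assumptions made up for the example, and the tie-breaking rule used when several candidates share the same median grade is left out.

```python
from typing import Dict, List

# Illustrative grade scale (an assumption): index 0 is the worst grade, 4 the best.
GRADES = ["Reject", "Poor", "Acceptable", "Good", "Excellent"]


def median_grade(grades: List[int]) -> int:
    """Return the lower median of the grades a candidate received."""
    ordered = sorted(grades)
    return ordered[(len(ordered) - 1) // 2]


def majority_judgement_winner(ballots: Dict[str, List[int]]) -> str:
    """Return the candidate with the highest median grade.

    Simplification: ties between equal medians are not resolved here.
    """
    return max(ballots, key=lambda name: median_grade(ballots[name]))


# Hypothetical electorate of 10 voters grading two hypothetical candidates.
ballots = {
    "Polarising": [4] * 4 + [0] * 6,  # 4 "Excellent", 6 "Reject"
    "Consensual": [2] * 5 + [3] * 5,  # 5 "Acceptable", 5 "Good"
}

winner = majority_judgement_winner(ballots)
print(winner, GRADES[median_grade(ballots[winner])])
```

With these made-up ballots, the polarising candidate has the most enthusiastic supporters but is rejected by six voters out of ten, so that candidate's median grade is "Reject" and the consensual candidate wins with a median of "Acceptable". This is the sense in which a candidate rejected by the majority cannot be elected.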

Further reading: Toxic Data. Comment les réseaux manipulent nos opinions (“Toxic Data, How networks manipulate our opinions” – in French), David Chavalarias, Flammarion, March 2022, 300 p., €19 (available in e-book format).

To find out more about Politoscope: https://politoscope.org

- 1. CNRS research professor and director of the ISC-PIF (Institut des Systèmes Complexes de Paris Île-de-France – CNRS).

- 2. Named after Heinz von Foerster, one of the originators of second-order cybernetics, and Jean-Pierre Dupuy, former professor at École Polytechnique, now teaching at Stanford University (California, US).

Author

Specializing in themes related to religions, spirituality and history, Matthieu Stricot works with various media, including Le Monde des Religions, La Vie, Sciences Humaines and Inrees.