The Ethics and Morals of Self-driving Cars

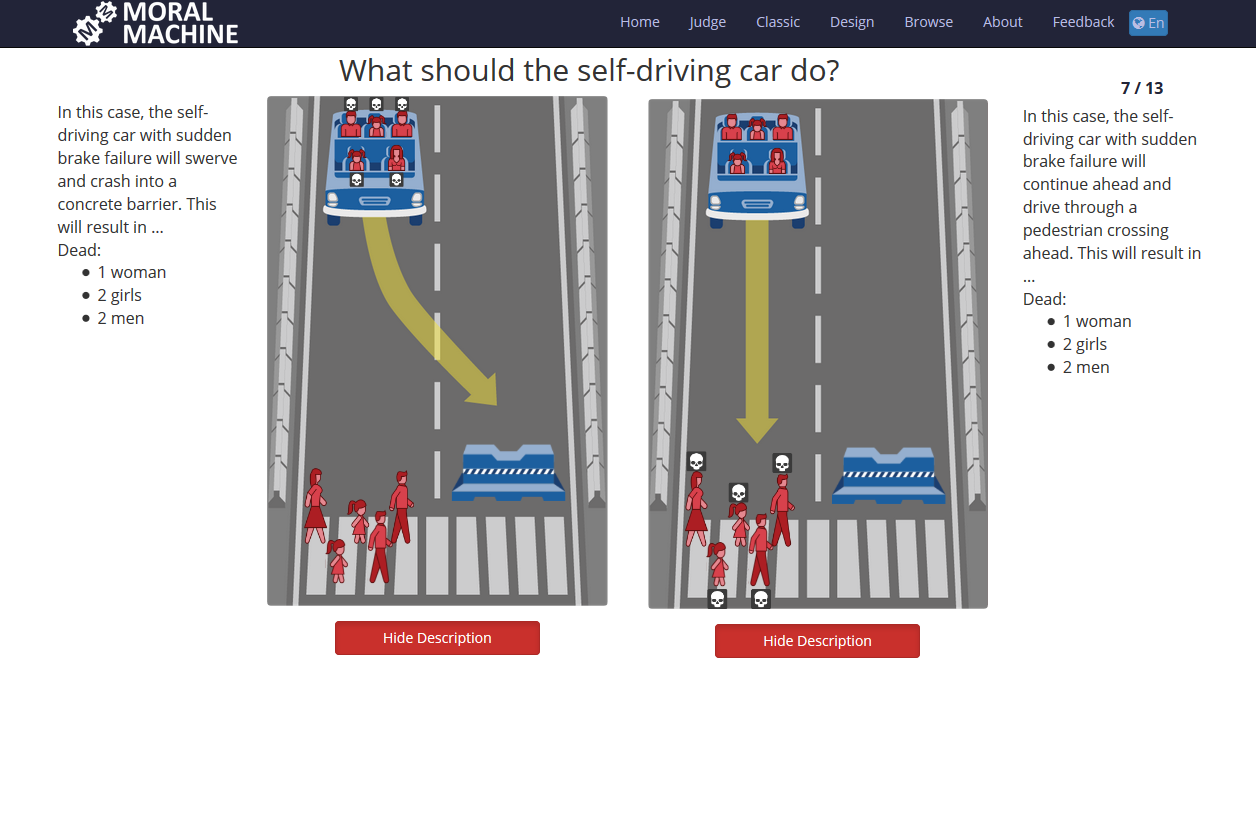

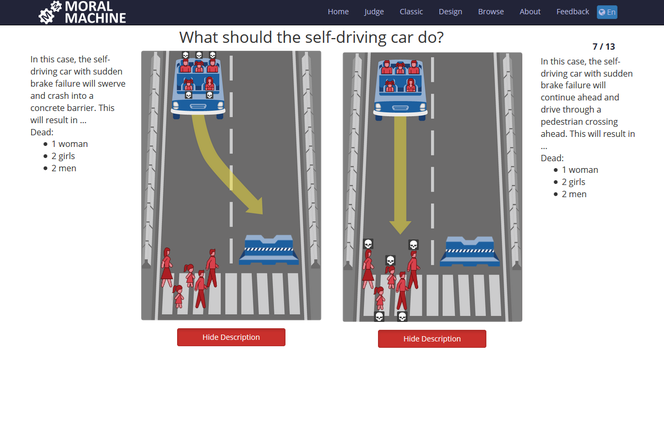

What kinds of risk are you willing to accept as a passenger in a self-driving car? For yourself, for other passengers and for people on the street? Given the choice, would you rather have a car hit five elderly pedestrians instead of a baby? A man instead of a woman? Should your car put your life in danger to save a pregnant woman, even if she is crossing against the light? We are working with researchers from MIT, Harvard, and the University of California [1] on the Moral Machine, a simulator for surveying people's moral preferences in situations where a self-driving car has to choose between two or more unavoidable accidents. Available in 10 languages, it has attracted nearly 4 million users and has already recorded 37 million responses from some 100 countries. [2]

Artificial intelligence in the hot seat

The ethical dilemmas in our simulations are not entirely new: they recall the well-known “trolley problem,” a thought experiment first described by Philippa Foot in 1967 and since used in ethics and the cognitive sciences. In its general form, a person can take an action that would benefit a group of individuals (A), but by so doing would harm another person (B). Under these circumstances, is it morally proper to take this action? The classic version asks what a person should do when given the opportunity to save the lives of five people by switching a runaway trolley to another track, knowing that on the second track, it is certain to kill one person. Many philosophers have deemed it impossible to make a morally irreproachable decision in this situation, and making no choice at all is a legitimate option within the confines of a thought experiment. Yet the trolley problem is sure to become reality when self-driving cars enter widespread use. Situations will arise in which a choice simply must be made.

The self-driving vehicle constitutes a unique case in artificial intelligence in terms of moral decision-making, for two reasons. First, with self-driving cars we are entrusting a machine with decisions of a kind that we never make in a logical, reasoned way: until now, driver reactions in accidents have been a matter of reflex and unforeseeable impulse. Second, other fields (e.g., medicine and law) give people time to accept or reject the machine’s decision, time that does not exist during an accident.

Different tasks, different risks

The purpose of the Moral Machine project is not to determine what is ethical or moral. Yet to us, it seems that before passing laws and allowing these cars to drive on our roads in massive numbers, both authorities and carmakers should know which solutions the public finds most socially acceptable.

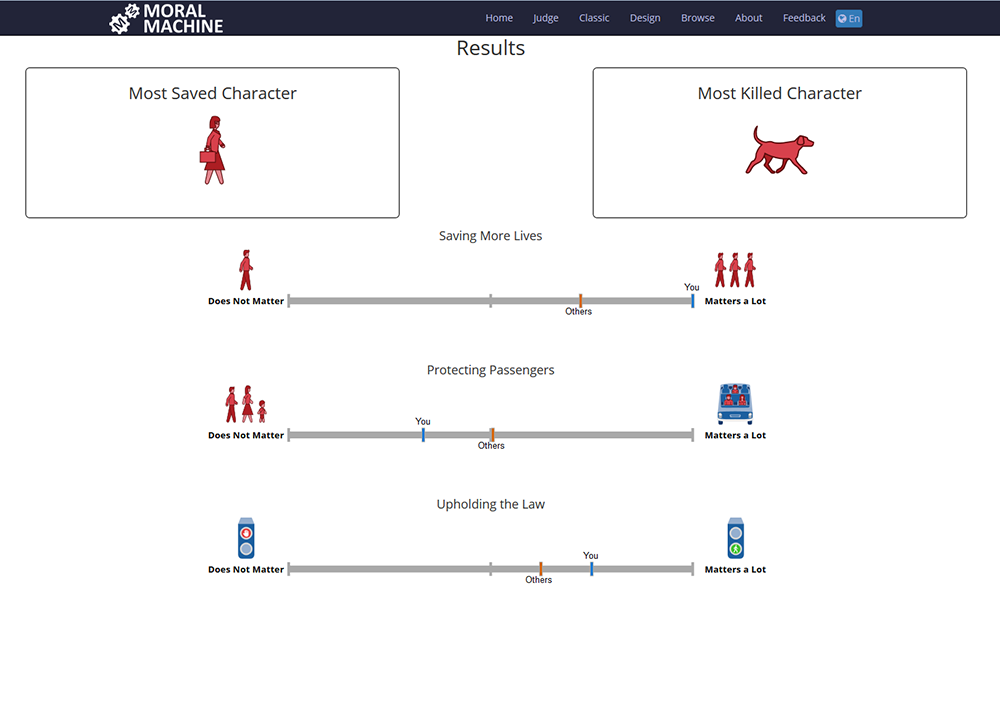

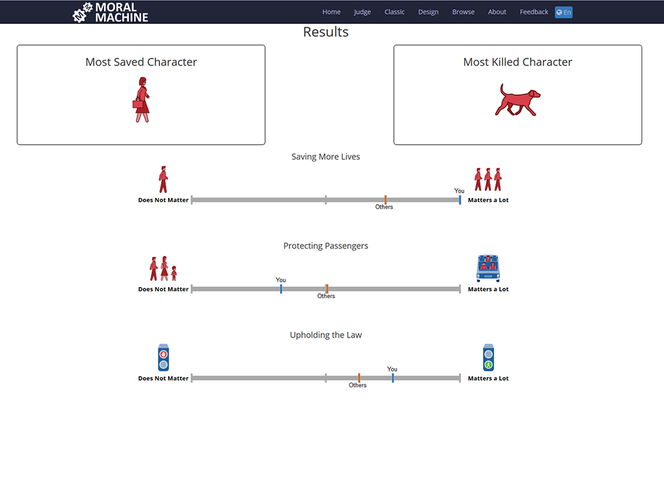

From our initial results, we already know that in the case of an unavoidable accident, the moral consensus is that it is best to minimize the number of deaths or injuries, whether among passengers or pedestrians. But when we ask consumers what kind of car they would want to buy, the vast majority prefer a vehicle that gives their own safety as a passenger priority over the safety of pedestrians.

This preference can lead to illogical behavior. For example, as a potential buyer of a self-driving car, one might focus too much on relative risk (the degree of risk for oneself compared with others in the case of an accident). Conversely, it’s easy to overlook the concept of absolute risk (the probability of an accident occurring in the first place). Significantly, self-driving cars could have as little as one-tenth the absolute risk of an accident as human-driven cars. [3] Weighing these two types of risk against each other, and especially judging their respective importance, is difficult. Consumers could in fact be deterred by a higher relative risk (the fact that the car might not always protect them if faced with a dilemma), ignoring the fact that a self-driving car greatly reduces the probability of such a situation ever arising.
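The interplay between the two types of risk can be made concrete with a little arithmetic. The following is a minimal sketch in Python, not a result from the Moral Machine: the function name and every figure in it are hypothetical, apart from the one-tenth reduction in absolute risk, which is the optimistic projection cited above. It treats a passenger's overall risk as the probability of an accident multiplied by the probability that the passenger bears the harm once an accident occurs.

```python
def passenger_harm_probability(p_accident: float, p_harm_given_accident: float) -> float:
    """Probability that a passenger is harmed on a given trip.

    p_accident: absolute risk, the chance that an accident happens at all.
    p_harm_given_accident: relative risk, the chance that the passenger
        (rather than someone else) bears the harm once an accident occurs.
    """
    return p_accident * p_harm_given_accident


# All figures below are hypothetical, chosen only to illustrate the reasoning.
HUMAN_P_ACCIDENT = 1e-4                        # assumed per-trip accident probability
AUTONOMOUS_P_ACCIDENT = HUMAN_P_ACCIDENT / 10  # the optimistic one-tenth projection

HUMAN_P_HARM = 0.2       # assumed: a human driver's reflexes favor self-protection
AUTONOMOUS_P_HARM = 0.5  # assumed: the car does not always favor its passenger

human = passenger_harm_probability(HUMAN_P_ACCIDENT, HUMAN_P_HARM)
autonomous = passenger_harm_probability(AUTONOMOUS_P_ACCIDENT, AUTONOMOUS_P_HARM)

print(f"human-driven passenger risk: {human:.1e}")
print(f"self-driving passenger risk: {autonomous:.1e}")
print(f"self-driving is safer for the passenger: {autonomous < human}")
```

Under these assumed numbers, the passenger is better off in the self-driving car even though that car protects them less once an accident is under way, because the tenfold drop in absolute risk outweighs the 2.5-fold rise in relative risk. The comparison would flip only if the relative risk rose by more than the factor by which the absolute risk falls.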

Errare humanum est…

To this difficulty, one must add that of sustaining user confidence in an autonomous system, knowing that the system will inevitably make some errors. (Autonomy here means the capacity of a machine endowed with artificial intelligence to reach a goal with no human intervention; the goal must still be set in advance by a human programmer, and the machine has no will of its own.) People quickly lose confidence in a machine that operates with artificial intelligence when it makes an error, no matter how small, whereas they easily pardon errors committed by other people. [4] We forgive human errors because we presume that people will naturally make an effort not to repeat them. But if an algorithm makes an error, we tend to presume that it hasn’t been programmed correctly. Even though this rigid attitude is not always well-founded (since algorithms can in fact learn from their mistakes and improve with use), it will nonetheless be an important parameter to consider before the massive deployment of self-driving cars. [5]

Even if we succeed in clarifying the concepts of relative and absolute risk in the public mind, and dispelling preconceived notions about the capacities of algorithms, the debate on the ethics of self-driving cars will be far from resolved. This is why last September, the German transportation minister released a report by experts in artificial intelligence, law and philosophy concluding that it would be morally unacceptable for an automobile to choose whose lives it should save based on characteristics like age and gender. A child’s life should not take priority over an adult’s. This is all well and good — our role is not to question the ethical merits of this recommendation. But our Moral Machine project makes it possible to understand what people expect and what they are likely to find offensive. For example, according to our results, it is highly probable that there would be public outrage if a child were sacrificed to avoid risking the life of an adult. We must understand that even if these decisions are made based on perfectly respectable ethical criteria, they will nonetheless result in a number of accidents that will spark public outrage, compromising the perceived acceptability and adoption of self-driving cars. Our goal is to anticipate these phenomena of public opinion and evaluate their potential harm, and not to incite lawmakers to concede to people’s desires as revealed by our simulator.

The analysis, views and opinions expressed in this section are those of the authors and do not necessarily reflect the position or policies of the CNRS.

- 1. Edmond Awad, Jean-François Bonnefon, Sohan Dsouza, Joe Henrich, Richard Kim, Iyad Rahwan, Azim Shariff, Jonathan Schulz.

- 2. The Moral Machine project stems from our first publication on the topic: J. F. Bonnefon, A. Shariff, & I. Rahwan, “The social dilemma of autonomous vehicles,” Science, 2016. 352(6293): 1573-1576. http://science.sciencemag.org/content/352/6293/1573

- 3. According to the most optimistic projections, the large-scale deployment of self-driving vehicles could reduce the accident rate by 90%, because nine-tenths of today’s traffic accidents are caused by human error (driver fatigue, drunk driving, violation of traffic laws, etc.).

- 4. B. J. Dietvorst, J. P. Simmons, & C. Massey, “Algorithm aversion: People erroneously avoid algorithms after seeing them err,” Journal of Experimental Psychology: General, 2015. 144(1): 114-126. https://www.ncbi.nlm.nih.gov/pubmed/25401381

- 5. A. Shariff, J. F. Bonnefon, & I. Rahwan, “Psychological roadblocks to the adoption of self-driving vehicles,” Nature Human Behaviour, 2017. https://www.nature.com/articles/s41562-017-0202-6.epdf?author_access_tok...