AI's Next Move: Real-Time Strategy Games

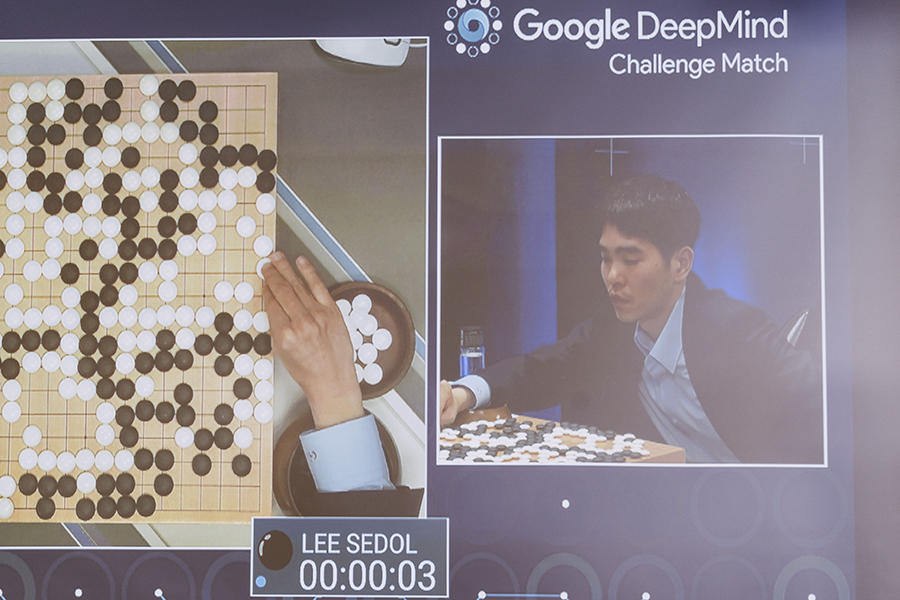

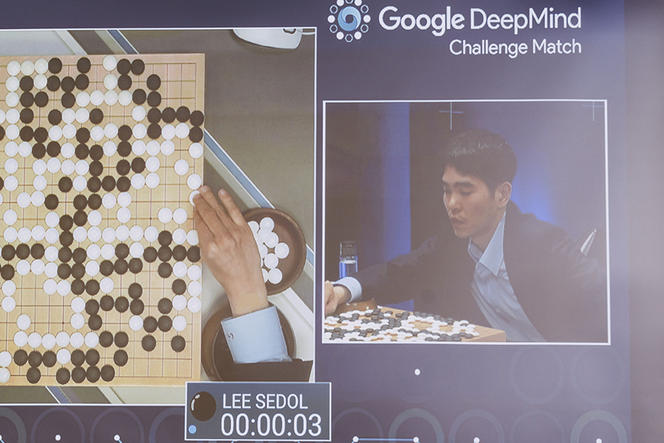

Behind its deceptively simple veneer, the game of Go offers such varied tactical choices that it long seemed artificial intelligence (AI) could never triumph. In March 2016, however, the fortress fell when AlphaGo chalked up a 4-1 victory over Lee Sedol, one of the world’s best Go players. Since then, the software has been improved, and in early January 2017 it thrashed a panel of champions with 60 victories in a row. Around the same time, another AI named Libratus, developed at Carnegie Mellon University, trounced four pros at Texas Hold’em, a variant of poker.

Real-time strategy: a new challenge

AlphaGo was developed by DeepMind, an artificial intelligence company purchased by Google in 2014. Eager to conquer more territory, the company announced last November its plans to take on StarCraft II.[1] In this highly popular strategy video game, players fight to the death to locate and exploit resources that will enable them to build bases and units. Multiple players can play at the same time, whether in teams or individually, although official competitions are played one on one. Like many video games, StarCraft II has an organized tournament circuit, with cash prizes of up to $500,000. These competitions breed ferocious rivalry, and it is in this arena that DeepMind will select its opponents.

Compared with chess and Go, however, StarCraft II presents new challenges. First, it is played in real time rather than in successive moves: all participants act simultaneously. The various official maps that serve as the board are not divided into 64 squares or 361 intersections, as in chess and Go, but offer instead a practically infinite range of different positions.

Players are confronted with the “fog of war,” which restricts their knowledge of their adversaries’ actions and positions to the actual field of vision of their own units. Finally, players can belong to one of three completely different races: this amounts to a chess game in which white and black use entirely different sets of pieces.
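To make the fog of war concrete, here is a minimal sketch of how partial observation can be modeled on a grid. Everything in it is an illustrative assumption rather than StarCraft II's actual rules: the function names, the circular sight radius, and the 10 x 10 map are hypothetical, and the real game also accounts for terrain and unit types.

```python
def visible_cells(own_units, sight, width, height):
    """Return the set of map cells within `sight` (Euclidean) of any friendly unit."""
    visible = set()
    for ux, uy in own_units:
        # Only scan the bounding box around the unit, clipped to the map.
        for x in range(max(0, ux - sight), min(width, ux + sight + 1)):
            for y in range(max(0, uy - sight), min(height, uy + sight + 1)):
                if (x - ux) ** 2 + (y - uy) ** 2 <= sight ** 2:
                    visible.add((x, y))
    return visible


def observed_enemies(enemy_units, visible):
    """Keep only the enemy positions that fall inside the visible area."""
    return [pos for pos in enemy_units if pos in visible]


# Hypothetical positions: two friendly units, three enemy units.
own = [(2, 2), (7, 1)]
enemies = [(3, 3), (8, 8), (7, 3)]
seen = observed_enemies(enemies, visible_cells(own, sight=2, width=10, height=10))
print(seen)
```

An AI playing under these conditions has to decide with only the `seen` list, not the full `enemies` list, which is what separates such games from chess or Go, where the whole board is always visible.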

How Go was beaten

To overcome human Go players, the company turned to Monte Carlo tree search and deep learning. “The Monte Carlo tree search method is based on the construction of a tree comprising states of a given problem close to the situation to be evaluated and on statistics gleaned from random games. The next moves to be played draw on the results of previous random games,” explains Tristan Cazenave, lecturer at the University of Paris-Dauphine and member of LAMSADE.[2]
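The idea Cazenave describes can be sketched on a toy game. The example below is a minimal Monte Carlo tree search (the common UCT variant) for Nim, where players alternately take one to three stones and whoever takes the last stone wins. It is not AlphaGo's implementation, which also relies on neural networks; the game, the class and function names, and the exploration constant `c=1.4` are all assumptions chosen for illustration.

```python
import math
import random


class Node:
    """One game state in the search tree (toy game: Nim, last stone wins)."""

    def __init__(self, stones, parent=None, move=None):
        self.stones = stones          # stones left for the player about to move
        self.parent = parent
        self.move = move              # number of stones taken to reach this node
        self.children = []
        self.untried = list(range(1, min(3, stones) + 1))
        self.visits = 0
        self.wins = 0.0               # wins for the player who moved INTO this node


def best_uct_child(node, c=1.4):
    """Pick the child balancing observed win rate and lack of exploration (UCB1)."""
    return max(
        node.children,
        key=lambda ch: ch.wins / ch.visits
        + c * math.sqrt(math.log(node.visits) / ch.visits),
    )


def player_to_move_wins(stones, rng):
    """Finish the game with uniformly random moves; True if the player to move wins."""
    mover_is_first = True
    while stones > 0:
        stones -= rng.randint(1, min(3, stones))
        if stones == 0:
            return mover_is_first
        mover_is_first = not mover_is_first
    return False  # no stones left: the player to move has already lost


def mcts_best_move(stones, iterations, rng):
    """Grow a tree from random games and return the most visited first move."""
    root = Node(stones)
    for _ in range(iterations):
        node = root
        # 1. Selection: descend through fully expanded nodes.
        while not node.untried and node.children:
            node = best_uct_child(node)
        # 2. Expansion: add one new state to the tree.
        if node.untried:
            move = node.untried.pop()
            child = Node(node.stones - move, parent=node, move=move)
            node.children.append(child)
            node = child
        # 3. Simulation: play the rest of the game at random.
        previous_mover_won = not player_to_move_wins(node.stones, rng)
        # 4. Backpropagation: update statistics, flipping perspective each level.
        while node is not None:
            node.visits += 1
            node.wins += 1.0 if previous_mover_won else 0.0
            previous_mover_won = not previous_mover_won
            node = node.parent
    return max(root.children, key=lambda ch: ch.visits).move


print(mcts_best_move(5, 3000, random.Random(0)))
```

With five stones, the sound move is to take one and leave the opponent four, a position from which every reply loses. The search is never told this rule; it emerges from the statistics of the random games.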

Deep learning relies on layered networks of simple computing units, inspired by neurons, that successively break down the task at hand. The first layers are concerned with identifying very simple fragments of situations, while the final layers provide an overall interpretation of the position. The AI first studies games played by high-level competitors, then plays against itself in partly random fashion. By repeating the operation millions of times, it accumulates solid statistics that it can use to adapt its approach and make good moves. The researchers therefore do not push particular strategies, but simply give the AI the means to identify the best possible strategies on its own.

“These forms of artificial intelligence have a great many applications,” continues Cazenave. “The algorithms used in Go, for example, are the same as those used for image recognition. The increase in machine power and improvements in algorithms account for the significant headway made recently.”

From deep learning to heuristics

The fact remains that while this method succeeded at Go, which is played on a board with 361 positions on which a stone can be placed, the maps in StarCraft II have approximately 150 x 150 positions. This means some 22,500 possible positions for each unit, and each player can control up to 200 units. The number of options grows almost exponentially with the number of units, and herein lies the main challenge for AI.
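A back-of-the-envelope calculation gives a feel for these orders of magnitude. The sketch below rests on loud simplifications that are not in the article: each Go point is treated as empty, black, or white (which counts illegal positions too), and each of a player's 200 units is assumed free to stand on any of the 22,500 cells independently, ignoring collisions, unit types, and everything else in the game state.

```python
import math

GO_POINTS = 19 * 19      # 361 intersections on a Go board
MAP_CELLS = 150 * 150    # approximate number of positions on a StarCraft II map
MAX_UNITS = 200          # maximum number of units per player, as stated above

# Work with base-10 logarithms so the results stay readable.
go_log10 = GO_POINTS * math.log10(3)          # crude bound: 3 ** 361
sc_log10 = MAX_UNITS * math.log10(MAP_CELLS)  # crude bound: 22500 ** 200

print(f"Positions per unit: {MAP_CELLS}")
print(f"Go board configurations: about 10^{go_log10:.0f}")
print(f"Placements of one player's units: about 10^{sc_log10:.0f}")
```

Even under these rough assumptions, the placement of a single player's army alone dwarfs the number of Go board configurations, before counting the opponent, the resources, or the passage of time.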

In fact, the StarCraft saga has long been used as a testing ground, even before the idea of beating human players was conceived. Since 2010, the AIIDE[3] StarCraft AI Competition has enabled researchers and devotees to pit their artificial intelligence systems against one another in Brood War, a predecessor of StarCraft II. “Until now, no one has used deep learning in these competitions,” indicates David Churchill, Assistant Professor of Computer Science at the Memorial University of Newfoundland and organizer of the event since 2011. “My own research focuses on heuristic search, which is better suited to real-time environments.”

Heuristic approaches to a problem provide approximations of the potential cost of each possible solution and gradually eliminate the least effective ones. By encoding such rules in advance, the programmer can keep only the choices that are, in theory, the most promising. Although this method sacrifices the power of deep learning, it dispenses with the long training time that deep learning requires. Video game producers, for their part, do not use deep learning when developing new games.
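A classic instance of heuristic search is A* pathfinding, sketched below on a tiny grid. It is offered only as an illustration of the principle, not as the method used by any competitor mentioned here; the grid, the `astar` function, and the choice of Manhattan distance as the cost estimate are assumptions made for the example.

```python
import heapq


def astar(grid, start, goal):
    """Return the length of the shortest 4-connected path, or None if unreachable."""

    def estimate(cell):
        # Manhattan distance: a cheap guess that never overestimates the real cost.
        return abs(cell[0] - goal[0]) + abs(cell[1] - goal[1])

    frontier = [(estimate(start), 0, start)]  # (cost so far + estimate, cost, cell)
    best_cost = {start: 0}
    while frontier:
        _, cost, cell = heapq.heappop(frontier)  # most promising candidate first
        if cell == goal:
            return cost
        if cost > best_cost[cell]:
            continue  # stale entry: a cheaper route to this cell was found later
        row, col = cell
        for nxt in ((row + 1, col), (row - 1, col), (row, col + 1), (row, col - 1)):
            r, c = nxt
            if 0 <= r < len(grid) and 0 <= c < len(grid[0]) and grid[r][c] == ".":
                new_cost = cost + 1
                if new_cost < best_cost.get(nxt, float("inf")):
                    best_cost[nxt] = new_cost
                    heapq.heappush(frontier, (new_cost + estimate(nxt), new_cost, nxt))
    return None


# "." is walkable ground, "#" is a wall that forces a detour.
GRID = [
    "..#.",
    "..#.",
    "....",
]
print(astar(GRID, start=(0, 0), goal=(0, 3)))
```

No training is needed: the estimate steers the search toward the goal from the very first call, which is why such methods suit real-time settings where decisions must be made within a fraction of a second.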

Giving players a chance

“We’re looking to entertain players, to give them the impression that they are up against generals from other countries,” says Carl Carenvall, AI programmer on Hearts of Iron IV, one of the most popular WWII strategy games. “Our AI has to make decisions that make sense in terms of actual history. You can’t have Imperial Japan behaving like Free France, for example. We don’t use deep learning for this and we prefer to keep a rein on AI so as to give it a specific personality.” In his view, this objective is not so far removed from that of the DeepMind projects: Carenvall considers that Google and Facebook are interested in real-time strategy (RTS) games not only to make their algorithms more effective, but also to make their results and interactions feel less cold and dehumanized to their end users.

But what do the actual players think? PtitDrogo, the in-game name of Théo Freydière, was the only French player to win a StarCraft II Premier Tournament, the most prestigious category, in 2016. He feels that an AI victory over humans in this game is quite possible. However, since StarCraft II is played in real time, PtitDrogo thinks that it is essential to limit the number of actions per minute (APM) that the AI can perform.

“While a pro player can manage 300 APM, a computer can easily exceed 3000,” he explains. “If it can individually control 50 units at the same time, it will be able to beat us effortlessly just by virtue of its superior speed.” DeepMind has announced that the APM of its AI system will indeed be limited, since the main goal is to improve the algorithms. Florian Richoux, lecturer at the University of Nantes and member of LS2N,[4] is well aware of this. He has also contributed to TorchCraft, a framework[5] linking the Brood War interface with the Torch deep-learning library. This is now a Facebook open source project. “Today, there is no AI system capable of playing an entire game of StarCraft using deep learning,” he points out. “For the moment, TorchCraft is used only for certain problems, such as the handling of small groups of units, but without any precise overall strategy.”
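The APM cap discussed above can be pictured as a sliding-window rate limiter. The sketch below is purely illustrative: DeepMind has not described its mechanism here, and the `ApmLimiter` class, its method names, and the one-minute window are assumptions. The figures of 300 and 3000 actions per minute are the ones quoted by PtitDrogo.

```python
from collections import deque


class ApmLimiter:
    """Allow at most `max_apm` actions within any sliding window of `window` seconds."""

    def __init__(self, max_apm, window=60.0):
        self.max_apm = max_apm
        self.window = window
        self.timestamps = deque()  # times of the actions accepted so far

    def try_act(self, now):
        """Return True and record the action if the budget allows it at time `now`."""
        # Forget actions that have slid out of the window.
        while self.timestamps and now - self.timestamps[0] >= self.window:
            self.timestamps.popleft()
        if len(self.timestamps) < self.max_apm:
            self.timestamps.append(now)
            return True
        return False


# A bot attempting 3000 actions in one minute, capped at a pro player's 300 APM.
limiter = ApmLimiter(max_apm=300)
accepted = sum(limiter.try_act(i * 0.02) for i in range(3000))
print(accepted)
```

With such a cap, the AI must choose which 300 actions matter most, so that any victory would reflect better decisions rather than faster clicking.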

However, Richoux has not ruled out the possibility that DeepMind could spring a few surprises, with the company promising to unveil its initial results in early 2017. Regarding the interest of Facebook and Google, which do not produce video games, he concludes that “only a tiny section of the research community is working on AI to improve games. For the vast majority of these researchers, games simply offer an extremely practical and highly publicized testing ground.”

- 1. By convention and for the sake of simplicity, StarCraft II is taken to mean the basic game, StarCraft II: Wings of Liberty, released in 2010 and since supplemented by two official expansion packs, Heart of the Swarm and Legacy of the Void.

- 2. Laboratoire d’analyse et modélisation de systèmes pour l’aide à la décision (CNRS/Université Paris-Dauphine).

- 3. AI for Interactive Digital Entertainment.

- 4. Laboratoire des Sciences du Numérique à Nantes (CNRS/École Centrale de Nantes/Université de Nantes/Institut Mines-Telecom).

- 5. A framework provides a foundation for software development. Here, it saves programmers interested in the subject from having to start from scratch.

Author

A graduate of the School of Journalism in Lille, Martin Koppe has worked for a number of publications including Dossiers d’archéologie, Science et Vie Junior and La Recherche, as well as the website Maxisciences.com. He also holds degrees in art history, archaeometry, and epistemology.