
Through Nina’s Eyes

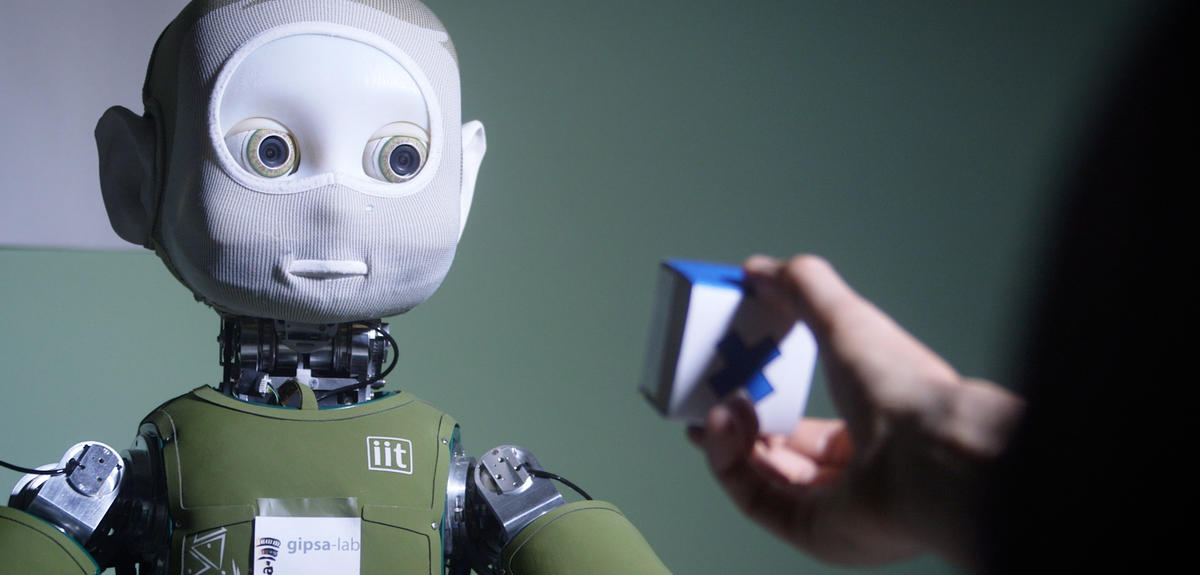

This is Nina. Made of steel, small motors and an electronic brain, she’s learning as fast as she can.

Nina, a humanoid robot, lives at GIPSA-lab in Grenoble, where scientists are working to improve communication between humans and the robots that are increasingly finding their way into our daily lives.

For the purposes of this study, Nina was given eyes, a mouth, lips and even ears to facilitate exchanges with her human counterparts. Although the human face is made up of more than 100 muscles, Nina’s face has only 10 motors.

The team at GIPSA-lab is working to decode non-verbal communication and to synchronize words with the movements that form the foundations of face-to-face interaction.

Gérard Bailly

Obviously, verbal communication is an audio signal transmitted from my lips to your ears. But there’s also a collection of gestures, gazes and facial expressions, combined with head movements, that help get the message across. This is what we call co-verbal communication. And of course there’s non-verbal communication as well, such as posture or the angle of the face, which is influenced by the person I’m addressing but also reveals more general information about my emotional and physical state, my age, my health, and so on. Combined, these signals make up the foundations of face-to-face human interaction. So our goal is to take these forms of expression, and the way we decode them, and impart that understanding to our robot.

After studying the mechanics of synchronizing movements with words, the scientists created a test that takes the form of a child’s game. Placed opposite a human, Nina becomes a teacher and asks her partner to pick up a colored cube and move it. Working towards a goal, watching her partner and using intonation help Nina refine her gestures and her overall ability to adapt to complex situations.

Frédéric Elisei

The idea is to incorporate the fundamentals of social interaction into Nina’s functioning. Robots on factory assembly lines already perform repetitive tasks in pursuit of a goal. What we would like is for our robot to approach us in ways that are nuanced yet repeatable; in other words, to accomplish the repetitive tasks of social interaction. We hope the robot can look at us when it needs to, assess goals within its environment, and identify them in a way that feels natural. The robot itself isn’t the end game for us, but rather another tool to better understand our fellow humans.

To help Nina seem as human as possible, the researchers must get inside her head. Literally. From a control centre next door, an operator wearing a connected headset can teach her, in real time, how to behave during a conversation. This immersive experience is made possible by the cameras installed in Nina’s eyes and the microphones in her ears.


Hello, I’m Nina, the humanoid robot of GIPSA-lab, and I’m currently being operated by Gérard Bailly.

The robot then replicates the movements of the operator’s head.

His eye movements are tracked by his headset and mirrored by the cameras in Nina’s eyes.

This assisted learning is building a database of behaviours that Nina will draw on as she faces different situations.

Frédéric Elisei

This data will enable us to teach other robots in the future, and to demonstrate that a data-driven approach can be applied to things like dialogue, manipulating objects on a table, or even dealing with multiple people; ultimately, to how the robot should behave. One could say that this robot isn’t intelligent or autonomous, but just as children learn by watching and mimicking adults, it is passively learning behaviours that will teach it to use its body.

Could Nina be the blueprint of intelligent behavior for the machines of tomorrow?

As a tool, she remains a research platform with no function beyond these walls. However, the data compiled will be invaluable to roboticists, who are continually working to create robots better suited to interacting with humans.

Robots that may one day master even the most subtle elements of human speech.

Meet Nina, the Social Robot

If we are to one day communicate naturally with robots, they will need to master not only speech, but also the subtle yet essential rules of non-verbal communication such as posture and eye movement. A team of researchers is using machine-learning techniques to teach those skills to Nina, their humanoid robot.

Frédéric Elisei (CNRS)

Duc Canh Nguyen (PhD student)

Grenoble Images Parole Signal Automatique (GIPSA-lab)

CNRS / Grenoble INP / Université Grenoble Alpes