Mind-reading Technology Moves Forward

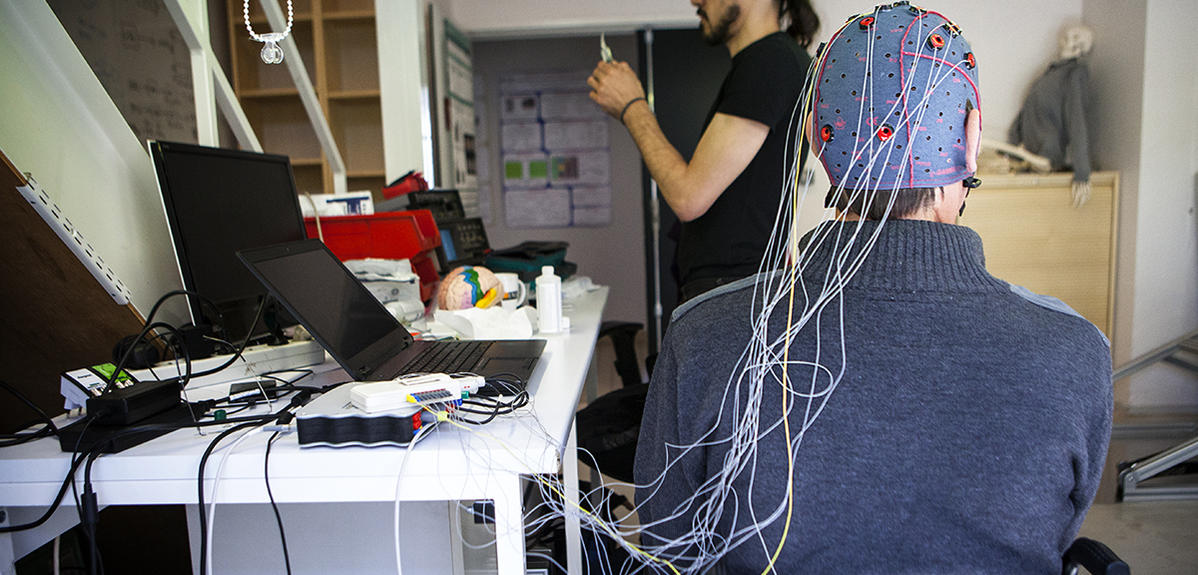

Body movement is second nature to most people, requiring no conscious thought or effort. Not so for others, such as amputees, stroke victims or elderly people with reduced motor skills, for whom science has developed a solution: brain-computer interfaces (BCIs). As the term suggests, BCIs connect the brain to a computer device operated by neuronal activity rather than physical manipulation. This is commonly done via electroencephalography (EEG), which records the brain's electrical activity through electrodes placed on the scalp. As a result, users can accomplish tasks like moving a wheelchair, artificial limbs or even the entire body.

While researchers started to bring this science-fictionesque (think Avatar and other blockbusters) concept to life several decades ago, we are still some distance off from what the big screen displays. A distance now markedly reduced by a ground-breaking new method for deciphering the movements users wish to make—experimentally shown to offer unprecedented ease and precision, virtually in real time.1

Technology has given birth to numerous aids for the motor-impaired: wheelchairs for quadriplegics, for example, or prosthetic limbs for amputees. However, use of these aids still meets a fundamental challenge. “How do we know where wheelchair-users want to go? Or when and how amputees want to move their limbs?” asks neuroscience and robotics specialist Gowrishankar Ganesh of the JRL.2 One way to do this, he suggests, “is to directly read the brain. The dream goal is a BCI that enables the control of an artificial limb or machine with as much ease as using one’s own body.” This objective has pushed researchers to come up with a range of methods ever since the term “brain-computer interface” was coined in 1976. But their general weakness is the heavy cognitive effort they demand of users, which makes them unsuitable for some. Whether these methods are “active,” requiring the user to concentrate and consciously emit certain brain signals, or “reactive,” in that they exploit external stimuli to trigger involuntary brain signals, they place a mental strain on users, who often need to undergo extensive training to get the systems to work.

This has prompted Ganesh and his colleagues from several institutions in Japan (the Tokyo Institute of Technology, Osaka University and the National Institute of Advanced Industrial Science and Technology) to pool their know-how. And rather than taking the path trodden by existing methods that attempt to decode the movement the user intends, the team tried something different. Their innovative strategy is based on so-called “sensory prediction errors.” Ganesh explains that “whenever we move, or even just think about moving, the brain unconsciously makes predictions about what’s going to happen next in the environment and anticipates the sensory feedback that will come.”

Basing themselves on “the known importance of errors between sensory prediction and actual sensory information for motor processes in the brain,” the researchers hypothesized that strong brain signatures—in other words, neural activity patterns—detectable by EEG, must exist for prediction errors. In this way, the team came up with a BCI system that “sends the brain a sensory stimulation, then—instead of decoding what the user is thinking—decodes whether the brain detects an error or not. As we know which stimulation we send, we can then work out what the user wants.”

To test the method, twelve seated participants were asked to imagine turning either right or left in a wheelchair while a galvanic vestibular stimulator sent electrical stimulations to the nerve in the ear responsible for balance, creating a subliminal (not consciously perceived) sensation of movement in one of these directions. The brain signals produced in response were then decoded to determine whether the stimulated direction matched the one the user had in mind. Impressively, this approach achieved an accuracy rate of 87.2%, with decoding complete within a mere 96 milliseconds of the signal. Above all, the method, which uses a safe, compact, portable device, fulfills the aim of an effort-free solution: users need no training, and their brains are stimulated with no conscious effort on their part. Ganesh also observes that “this approach is entirely different from previous ones, so it can be used complementarily.” Having successfully tested their prototype in lab conditions, the team in Japan is now moving to the next stage of trialing the system on actual wheelchair users.
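The inference step in this left-or-right experiment can be illustrated with a few lines of Python. This is only a sketch of the logic as the article describes it, not the team's software: the names `Direction`, `opposite` and `infer_intended_direction` are hypothetical, and the EEG classifier that decides whether a prediction error occurred is reduced here to a simple true/false input.

```python
from enum import Enum


class Direction(Enum):
    LEFT = "left"
    RIGHT = "right"


def opposite(direction: Direction) -> Direction:
    """Return the other of the two possible turning directions."""
    return Direction.RIGHT if direction is Direction.LEFT else Direction.LEFT


def infer_intended_direction(stimulated: Direction, error_detected: bool) -> Direction:
    """Infer what the user imagined from the known stimulation.

    Assumption: `error_detected` stands in for the output of an EEG decoder
    that reports whether the brain registered a prediction error.
    - No error: the stimulated direction matched the imagined one.
    - Error: the user must have imagined the other direction.
    """
    return opposite(stimulated) if error_detected else stimulated


# The stimulator simulated a left turn and the brain signalled a mismatch,
# so the user is taken to have imagined turning right.
print(infer_intended_direction(Direction.LEFT, error_detected=True).value)
```

The point of the design is that the system never tries to read the intention itself. It only needs a yes-or-no answer about a mismatch, and it already knows which stimulation it sent.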

Importantly, while the prototype only offered the choice between turning right and left, the method’s potential in fact stretches far wider. Ganesh points out that “in our experiment, we focused on the turning movement, which is why we used a vestibular stimulator that acts on the part of the brain associated with turning. But we can explore other movements by using other types of stimulators. Potentially there are no limits on the number of directions that can be tested by a mind-reading device.” And the team’s future projects are headed in new directions. For starters, the researchers intend to fine-tune the method’s already-striking precision. “In our experiment,” the JRL researcher notes, “we stimulated the user only once. We’re now looking at performing multiple stimulations to increase the accuracy rate.”
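Why might several stimulations raise the accuracy rate? One way to see it is a back-of-the-envelope calculation in Python. The sketch below assumes, purely for illustration, that each stimulation is decoded correctly with the same probability, that the outcomes are independent, and that the final answer is a majority vote. The article does not say how the team intends to combine repeated stimulations, and real EEG trials may well not be independent. The function name `majority_vote_accuracy` is hypothetical.

```python
from math import comb


def majority_vote_accuracy(p_single: float, n_trials: int) -> float:
    """Probability that a majority of n independent trials is decoded correctly.

    Assumptions (not from the study): every trial has the same success
    probability `p_single`, trials are independent, and `n_trials` is odd
    so that a majority always exists.
    """
    if n_trials < 1 or n_trials % 2 == 0:
        raise ValueError("n_trials must be a positive odd number")
    if not 0.0 <= p_single <= 1.0:
        raise ValueError("p_single must lie between 0 and 1")
    needed = n_trials // 2 + 1  # smallest number of correct trials that wins the vote
    return sum(
        comb(n_trials, k) * p_single**k * (1.0 - p_single) ** (n_trials - k)
        for k in range(needed, n_trials + 1)
    )


# Start from the single-stimulation accuracy reported in the experiment.
for n in (1, 3, 5):
    print(n, round(majority_vote_accuracy(0.872, n), 3))
```

Under these idealized assumptions, accuracy climbs with each added pair of stimulations, at the cost of a longer decoding time.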

The team also plans to deepen understanding of how prediction errors are signaled by the brain. “We want to find out more about the timing, region and frequency of signals associated with prediction errors to define their brain signature,” says Ganesh. “Ultimately, this could help us to diagnose social diseases that might be characterized by prediction errors.”

Finally, while the team’s study started off with a focus on motor decisions, the scientists, in partnership with other collaborators, are now “expanding the method to decision-making situations where the answer is ‘yes’ or ‘no.’” In particular, studies are underway to develop a means of communicating with people who have locked-in syndrome, who are typically mentally aware but unable to move or speak because they have lost muscle control. The first results, Ganesh reports, are “promising.”

Author

As well as contributing to CNRS News, Fui Lee Luk is a freelance translator for various publishing houses and websites. She has a PhD in French literature (Paris III / University of Sydney).